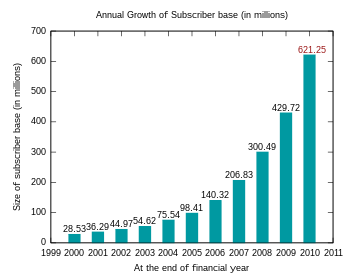

Normal distribution

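| Normal distribution | |
|---|---|
| Probability density function | (plot) The red curve is the standard normal distribution. |
| Cumulative distribution function | (plot) |
| Notation | $\mathcal{N}(\mu, \sigma^2)$ |
| Parameters | $\mu \in \mathbb{R}$ = mean (location); $\sigma^2 \in \mathbb{R}_{>0}$ = variance (squared scale) |
| Support | $x \in \mathbb{R}$ |
| PDF | $\frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$ |
| CDF | $\Phi\left(\frac{x-\mu}{\sigma}\right) = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x-\mu}{\sigma\sqrt{2}}\right)\right]$ |
| Quantile | $\mu + \sigma\sqrt{2}\,\operatorname{erf}^{-1}(2p-1)$ |
| Mean | $\mu$ |
| Median | $\mu$ |
| Mode | $\mu$ |
| Variance | $\sigma^2$ |
| Mean absolute deviation | $\sigma\sqrt{2/\pi}$ |
| Skewness | $0$ |
| Excess kurtosis | $0$ |
| Entropy | $\frac{1}{2}\log(2\pi e\sigma^2)$ |
| MGF | $\exp(\mu t + \sigma^2 t^2/2)$ |
| CF | $\exp(i\mu t - \sigma^2 t^2/2)$ |
| Fisher information | $\mathcal{I}(\mu,\sigma) = \begin{pmatrix}1/\sigma^2 & 0\\ 0 & 2/\sigma^2\end{pmatrix}$, $\mathcal{I}(\mu,\sigma^2) = \begin{pmatrix}1/\sigma^2 & 0\\ 0 & 1/(2\sigma^4)\end{pmatrix}$ |
| Kullback–Leibler divergence | $D_{\mathrm{KL}}(\mathcal{N}_0 \Vert \mathcal{N}_1) = \frac{1}{2}\left[\left(\frac{\sigma_0}{\sigma_1}\right)^2 + \frac{(\mu_1-\mu_0)^2}{\sigma_1^2} - 1 + 2\ln\frac{\sigma_1}{\sigma_0}\right]$ |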


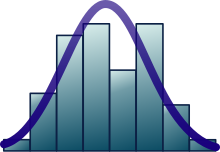

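In statistics, a normal distribution (also known as a Gaussian, Gauss, or Laplace–Gauss distribution) is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}.$$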

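The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$.[1] A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate.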

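Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known.[2][3] Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution converges to a normal distribution as the number of samples increases. Therefore, physical quantities that are expected to be the sum of many independent processes, such as measurement errors, often have distributions that are nearly normal.[4]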

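Moreover, Gaussian distributions have some unique properties that are valuable in analytic studies. For instance, any linear combination of a fixed collection of independent normal deviates is a normal deviate. Many results and methods, such as propagation of uncertainty and least squares parameter fitting, can be derived analytically in explicit form when the relevant variables are normally distributed.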

A normal distribution is sometimes informally called a bell curve.[5] However, many other distributions are bell-shaped (such as the Cauchy, Student's t, and logistic distributions).

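The univariate probability distribution is generalized for vectors in the multivariate normal distribution and for matrices in the matrix normal distribution.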

Definitions

Standard normal distribution

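The simplest case of a normal distribution is known as the standard normal distribution or unit normal distribution. This is a special case when $\mu = 0$ and $\sigma = 1$, and it is described by this probability density function (or density):

$$\varphi(z) = \frac{e^{-z^2/2}}{\sqrt{2\pi}}.$$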

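The variable $z$ has a mean of 0 and a variance and standard deviation of 1. The density $\varphi(z)$ has its peak $1/\sqrt{2\pi}$ at $z = 0$ and inflection points at $z = +1$ and $z = -1$.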

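Although the density above is most commonly known as the standard normal, a few authors have used that term to describe other versions of the normal distribution. Carl Friedrich Gauss, for example, once defined the standard normal as

$$\varphi(z) = \frac{e^{-z^2}}{\sqrt{\pi}},$$

which has a variance of 1/2, and Stephen Stigler[6] once defined the standard normal as

$$\varphi(z) = e^{-\pi z^2},$$

which has a simple functional form and a variance of $\sigma^2 = 1/(2\pi)$.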

General normal distribution

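Every normal distribution is a version of the standard normal distribution, whose domain has been stretched by a factor $\sigma$ (the standard deviation) and then translated by $\mu$ (the mean value):

$$f(x \mid \mu, \sigma^2) = \frac{1}{\sigma}\varphi\left(\frac{x-\mu}{\sigma}\right).$$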

The probability density must be scaled by $1/\sigma$ so that the integral is still 1.

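If $Z$ is a standard normal deviate, then $X = \sigma Z + \mu$ will have a normal distribution with expected value $\mu$ and standard deviation $\sigma$. This is equivalent to saying that the standard normal distribution $Z$ can be scaled/stretched by a factor of $\sigma$ and shifted by $\mu$ to yield a different normal distribution, called $X$. Conversely, if $X$ is a normal deviate with parameters $\mu$ and $\sigma^2$, then this $X$ distribution can be re-scaled and shifted via the formula $Z = (X - \mu)/\sigma$ to convert it to the standard normal distribution. This variate is also called the standardized form of $X$.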
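The stretch-and-shift relationship between the general and the standard density can be checked numerically. The following is a minimal sketch using only Python's standard library; the helper names `std_normal_pdf`, `normal_pdf`, and `standardize` are illustrative choices, not an established API.

```python
import math


def std_normal_pdf(z: float) -> float:
    """Standard normal density: phi(z) = exp(-z^2 / 2) / sqrt(2 pi)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_pdf(x: float, mu: float, sigma: float) -> float:
    """Density of N(mu, sigma^2): phi stretched by sigma and shifted by mu.

    The 1/sigma factor keeps the total area under the curve equal to 1.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return std_normal_pdf((x - mu) / sigma) / sigma


def standardize(x: float, mu: float, sigma: float) -> float:
    """Standardized form Z = (X - mu) / sigma."""
    return (x - mu) / sigma


print(std_normal_pdf(0.0))  # peak value 1/sqrt(2 pi), about 0.39894
print(normal_pdf(7.0, mu=5.0, sigma=2.0), std_normal_pdf(1.0) / 2.0)
```

Dividing by `sigma` in `normal_pdf` is exactly the $1/\sigma$ rescaling described above; without it the area under the stretched curve would be $\sigma$ instead of 1.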

Notation

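The probability density of the standard Gaussian distribution (standard normal distribution, with zero mean and unit variance) is often denoted with the Greek letter $\phi$ (phi).[7] The alternative form of the Greek letter phi, $\varphi$, is also used quite often.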

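The normal distribution is often referred to as $N(\mu, \sigma^2)$ or $\mathcal{N}(\mu, \sigma^2)$.[8] Thus when a random variable $X$ is normally distributed with mean $\mu$ and standard deviation $\sigma$, one may write

$$X \sim \mathcal{N}(\mu, \sigma^2).$$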

Alternative parameterizations

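Some authors advocate using the precision $\tau$ as the parameter defining the width of the distribution, instead of the standard deviation $\sigma$ or the variance $\sigma^2$. The precision is normally defined as the reciprocal of the variance, $1/\sigma^2$.[9] The formula for the distribution then becomes

$$f(x) = \sqrt{\frac{\tau}{2\pi}}\, e^{-\tau(x-\mu)^2/2}.$$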

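This choice is claimed to have advantages in numerical computations when $\sigma$ is very close to zero, and to simplify formulas in some contexts, such as in the Bayesian inference of variables with a multivariate normal distribution.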

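Alternatively, the reciprocal of the standard deviation, $\tau' = 1/\sigma$, might be defined as the precision, in which case the expression of the normal distribution becomes

$$f(x) = \frac{\tau'}{\sqrt{2\pi}}\, e^{-(\tau')^2(x-\mu)^2/2}.$$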

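According to Stigler, this formulation is advantageous because of a much simpler and easier-to-remember formula, and simple approximate formulas for the quantiles of the distribution.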
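The three parameterizations describe the same curve. A small Python sketch (function names are illustrative) evaluates each form with $\tau = 1/\sigma^2$ and $\tau' = 1/\sigma$ so the agreement can be checked directly:

```python
import math


def pdf_sigma(x: float, mu: float, sigma: float) -> float:
    """Usual form, parameterized by the standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def pdf_precision(x: float, mu: float, tau: float) -> float:
    """Parameterized by the precision tau = 1 / sigma^2."""
    return math.sqrt(tau / (2.0 * math.pi)) * math.exp(-0.5 * tau * (x - mu) ** 2)


def pdf_inverse_sd(x: float, mu: float, tau_prime: float) -> float:
    """Parameterized by tau' = 1 / sigma."""
    return tau_prime / math.sqrt(2.0 * math.pi) * math.exp(-0.5 * (tau_prime * (x - mu)) ** 2)


mu, sigma = 1.0, 0.7
for x in (-1.0, 0.0, 1.3, 2.5):
    print(x, pdf_sigma(x, mu, sigma), pdf_precision(x, mu, 1 / sigma**2), pdf_inverse_sd(x, mu, 1 / sigma))
```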

Normal distributions form an exponential family with natural parameters $\theta_1 = \frac{\mu}{\sigma^2}$ and $\theta_2 = \frac{-1}{2\sigma^2}$, and natural statistics $x$ and $x^2$. The dual expectation parameters for the normal distribution are $\eta_1 = \mu$ and $\eta_2 = \mu^2 + \sigma^2$.

Cumulative distribution function

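The cumulative distribution function (CDF) of the standard normal distribution, usually denoted with the capital Greek letter $\Phi$, is the integral

$$\Phi(x) = \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{x} e^{-t^2/2}\,dt.$$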

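The related error function $\operatorname{erf}(x)$ gives the probability that a random variable with a normal distribution of mean 0 and variance 1/2 falls in the range $[-x, x]$. That is:

$$\operatorname{erf}(x) = \frac{1}{\sqrt{\pi}}\int_{-x}^{x} e^{-t^2}\,dt = \frac{2}{\sqrt{\pi}}\int_{0}^{x} e^{-t^2}\,dt.$$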

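These integrals cannot be expressed in terms of elementary functions, and are often said to be special functions. However, many numerical approximations are known; see below for more.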

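The two functions are closely related, namely

$$\Phi(x) = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right].$$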

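For a generic normal distribution with density $f$, mean $\mu$ and standard deviation $\sigma$, the cumulative distribution function is

$$F(x) = \Phi\left(\frac{x-\mu}{\sigma}\right) = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x-\mu}{\sigma\sqrt{2}}\right)\right].$$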

The complement of the standard normal CDF, $Q(x) = 1 - \Phi(x)$, is often called the Q-function, especially in engineering texts.[10][11] It gives the probability that the value of a standard normal random variable $X$ will exceed $x$: $P(X > x)$. Other definitions of the $Q$-function, all of which are simple transformations of $\Phi$, are also used occasionally.[12]

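The graph of the standard normal CDF $\Phi$ has 2-fold rotational symmetry around the point (0, 1/2); that is, $\Phi(-x) = 1 - \Phi(x)$. Its antiderivative (indefinite integral) can be expressed as follows:

$$\int \Phi(x)\,dx = x\Phi(x) + \varphi(x) + C.$$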
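The erf relationship gives a direct way to evaluate $\Phi$, $F$, and the Q-function. This is a minimal sketch with Python's `math.erf` and `math.erfc`; the choice of `erfc` for the tail is ours, made because $1 - \Phi(x)$ loses all precision for large $x$ in floating point.

```python
import math


def Phi(x: float) -> float:
    """Standard normal CDF: (1 + erf(x / sqrt(2))) / 2."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def Q(x: float) -> float:
    """Upper tail P(X > x) = 1 - Phi(x).

    erfc avoids the cancellation in 1 - Phi(x) when x is large.
    """
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def normal_cdf(x: float, mu: float, sigma: float) -> float:
    """CDF of N(mu, sigma^2): Phi((x - mu) / sigma)."""
    return Phi((x - mu) / sigma)


def Phi_antiderivative(x: float) -> float:
    """x * Phi(x) + phi(x), an antiderivative of Phi (with C = 0)."""
    phi = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return x * Phi(x) + phi


print(Phi(0.0))               # 0.5
print(Phi(-1.3) + Phi(1.3))   # 1 up to rounding, by the symmetry Phi(-x) = 1 - Phi(x)
print(Q(10.0))                # tiny but nonzero; 1 - Phi(10.0) rounds to 0.0 in double precision
```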

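The CDF of the standard normal distribution can be expanded by integration by parts into a series:

$$\Phi(x) = \frac{1}{2} + \frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}\left[x + \frac{x^3}{3} + \frac{x^5}{3\cdot 5} + \cdots + \frac{x^{2n+1}}{(2n+1)!!} + \cdots\right],$$

where $!!$ denotes the double factorial.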

An asymptotic expansion of the CDF for large $x$ can also be derived using integration by parts. For more, see Error function#Asymptotic expansion.[13]

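A quick approximation to the standard normal distribution's CDF can be found by using a Taylor series approximation:

$$\Phi(x) \approx \frac{1}{2} + \frac{1}{\sqrt{2\pi}}\sum_{k=0}^{n}\frac{(-1)^k x^{2k+1}}{2^k\, k!\,(2k+1)}.$$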
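The truncated Taylor series can be compared against the erf-based value. In this sketch the number of retained terms `n` is a free illustrative parameter, not something the formula prescribes:

```python
import math


def Phi_taylor(x: float, n: int = 40) -> float:
    """Truncated Taylor series for Phi(x), keeping terms k = 0..n:
    1/2 + (1/sqrt(2 pi)) * sum (-1)^k x^(2k+1) / (2^k k! (2k+1)).
    """
    total = 0.0
    for k in range(n + 1):
        total += (-1) ** k * x ** (2 * k + 1) / (2 ** k * math.factorial(k) * (2 * k + 1))
    return 0.5 + total / math.sqrt(2.0 * math.pi)


def Phi_erf(x: float) -> float:
    """Reference value via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


for x in (0.5, 1.0, 2.0, 3.0):
    print(x, Phi_taylor(x), Phi_erf(x))
```

The series converges for every $x$, but for large $|x|$ the alternating terms first grow before they shrink, so more terms are needed and floating-point cancellation sets in; the erf route is the better choice there.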

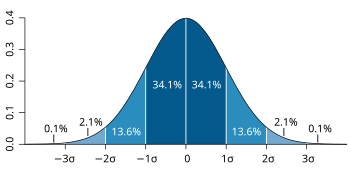

Standard deviation and coverage

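About 68% of values drawn from a normal distribution are within one standard deviation $\sigma$ of the mean; about 95% of the values lie within two standard deviations; and about 99.7% are within three standard deviations.[5] This fact is known as the 68–95–99.7 (empirical) rule, or the 3-sigma rule.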

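More precisely, the probability that a normal deviate lies in the range between $\mu - n\sigma$ and $\mu + n\sigma$ is given by

$$F(\mu + n\sigma) - F(\mu - n\sigma) = \Phi(n) - \Phi(-n) = \operatorname{erf}\left(\frac{n}{\sqrt{2}}\right).$$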

To 12 significant digits, the values for $n = 1, 2, \ldots, 6$ are:[14]

| $n$ | $p = F(\mu + n\sigma) - F(\mu - n\sigma)$ | $1 - p$ | OEIS |
|---|---|---|---|
| 1 | 0.682689492137 | 0.317310507863 | OEIS: A178647 |
| 2 | 0.954499736104 | 0.045500263896 | OEIS: A110894 |
| 3 | 0.997300203937 | 0.002699796063 | OEIS: A270712 |
| 4 | 0.999936657516 | 0.000063342484 | |
| 5 | 0.999999426697 | 0.000000573303 | |
| 6 | 0.999999998027 | 0.000000001973 | |

For large $n$, one can use the approximation $1 - p \approx \frac{e^{-n^2/2}}{n}\sqrt{\frac{2}{\pi}}$.
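The coverage table and the large-$n$ approximation can be reproduced from $\operatorname{erf}(n/\sqrt{2})$. This sketch uses `math.erfc` for $1 - p$ (our choice, to keep precision in the far tail); the function names are illustrative:

```python
import math


def coverage(n: float) -> float:
    """P(mu - n sigma < X < mu + n sigma) = erf(n / sqrt(2))."""
    return math.erf(n / math.sqrt(2.0))


def outside(n: float) -> float:
    """1 - coverage(n), computed with erfc to keep precision for large n."""
    return math.erfc(n / math.sqrt(2.0))


def outside_large_n(n: float) -> float:
    """Large-n approximation: exp(-n^2 / 2) / n * sqrt(2 / pi)."""
    return math.exp(-0.5 * n * n) / n * math.sqrt(2.0 / math.pi)


for n in range(1, 7):
    print(n, f"{coverage(n):.12f}", f"{outside(n):.12f}", f"{outside_large_n(n):.3e}")
```

At $n = 6$ the approximation is only a few percent high, and its relative error keeps shrinking as $n$ grows.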

Quantile function

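The quantile function of a distribution is the inverse of the cumulative distribution function. The quantile function of the standard normal distribution is called the probit function, and can be expressed in terms of the inverse error function:

$$\Phi^{-1}(p) = \sqrt{2}\,\operatorname{erf}^{-1}(2p - 1), \quad p \in (0, 1).$$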

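For a normal random variable with mean $\mu$ and variance $\sigma^2$, the quantile function is

$$F^{-1}(p) = \mu + \sigma\Phi^{-1}(p) = \mu + \sigma\sqrt{2}\,\operatorname{erf}^{-1}(2p - 1), \quad p \in (0, 1).$$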

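The quantile $\Phi^{-1}(p)$ of the standard normal distribution is commonly denoted as $z_p$. These values are used in hypothesis testing, construction of confidence intervals and Q–Q plots. A normal random variable $X$ will exceed $\mu + z_p\sigma$ with probability $1 - p$, and will lie outside the interval $\mu \pm z_p\sigma$ with probability $2(1 - p)$. In particular, the quantile $z_{0.975}$ is 1.96; therefore a normal random variable will lie outside the interval $\mu \pm 1.96\sigma$ in only 5% of cases.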

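The following table gives the quantile $z_p$ such that $X$ will lie in the range $\mu \pm z_p\sigma$ with a specified probability $p$. These values are useful to determine tolerance intervals for sample averages and other statistical estimators with normal (or asymptotically normal) distributions.[15][16] The table shows $\sqrt{2}\,\operatorname{erf}^{-1}(p) = \Phi^{-1}\left(\frac{p+1}{2}\right)$, not $\Phi^{-1}(p)$ as defined above.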

| $p$ | $z_p$ | $p$ | $z_p$ |
|---|---|---|---|
| 0.80 | 1.281551565545 | 0.999 | 3.290526731492 |
| 0.90 | 1.644853626951 | 0.9999 | 3.890591886413 |
| 0.95 | 1.959963984540 | 0.99999 | 4.417173413469 |
| 0.98 | 2.326347874041 | 0.999999 | 4.891638475699 |
| 0.99 | 2.575829303549 | 0.9999999 | 5.326723886384 |
| 0.995 | 2.807033768344 | 0.99999999 | 5.730728868236 |
| 0.998 | 3.090232306168 | 0.999999999 | 6.109410204869 |

For small $p$, the quantile function has the useful asymptotic expansion

$$\Phi^{-1}(p) = -\sqrt{\ln\frac{1}{p^2} - \ln\ln\frac{1}{p^2} - \ln(2\pi)} + \mathcal{o}(1).$$[17]
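Python's standard library exposes the probit function as `statistics.NormalDist().inv_cdf`. The sketch below uses it to reproduce the table and to compare the small-$p$ expansion (with the $o(1)$ remainder dropped) against the exact value; `two_sided_z` and `probit_small_p` are illustrative names.

```python
import math
from statistics import NormalDist

STANDARD = NormalDist()  # mu = 0, sigma = 1


def quantile(p: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """F^{-1}(p) = mu + sigma * Phi^{-1}(p)."""
    return mu + sigma * STANDARD.inv_cdf(p)


def two_sided_z(p: float) -> float:
    """z such that X lies in mu +/- z sigma with probability p: Phi^{-1}((1 + p) / 2)."""
    return STANDARD.inv_cdf((1.0 + p) / 2.0)


def probit_small_p(p: float) -> float:
    """Leading terms of the small-p expansion, dropping the o(1) remainder.

    ln(1/p^2) is computed as -2 ln p so that a tiny p does not overflow.
    """
    L = -2.0 * math.log(p)
    return -math.sqrt(L - math.log(L) - math.log(2.0 * math.pi))


for p in (0.80, 0.90, 0.95, 0.99, 0.999):
    print(p, f"{two_sided_z(p):.12f}")
print(probit_small_p(1e-10), STANDARD.inv_cdf(1e-10))
```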

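The normal distribution is the only distribution whose cumulants beyond the first two (i.e., other than the mean and variance) are zero. It is also the continuous distribution with the maximum entropy for a specified mean and variance.[18][19] Geary has shown, assuming that the mean and variance are finite, that the normal distribution is the only distribution where the mean and variance calculated from a set of independent draws are independent of each other.[20][21]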

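The normal distribution is a subclass of the elliptical distributions. The normal distribution is symmetric about its mean, and is non-zero over the entire real line. As such it may not be a suitable model for variables that are inherently positive or strongly skewed, such as the weight of a person or the price of a share. Such variables may be better described by other distributions, such as the log-normal distribution or the Pareto distribution.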

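The value of the normal density is practically zero when the value $x$ lies more than a few standard deviations away from the mean (e.g., a spread of three standard deviations covers all but 0.27% of the total distribution). Therefore, it may not be an appropriate model when one expects a significant fraction of outliers (values that lie many standard deviations away from the mean), and least squares and other statistical inference methods that are optimal for normally distributed variables often become highly unreliable when applied to such data. In those cases, a more heavy-tailed distribution should be assumed and the appropriate robust statistical inference methods applied.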

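The Gaussian distribution belongs to the family of stable distributions, which are the attractors of sums of independent, identically distributed distributions whether or not the mean or variance is finite. Except for the Gaussian, which is a limiting case, all stable distributions have heavy tails and infinite variance. It is one of the few distributions that are stable and that have probability density functions that can be expressed analytically, the others being the Cauchy distribution and the Lévy distribution.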

Symmetries and derivatives

The normal distribution with density $f(x)$ (mean $\mu$ and standard deviation $\sigma > 0$) has the following properties:

- It is symmetric around the point $x = \mu$, which is at the same time the mode, the median and the mean of the distribution.[22]

- It is unimodal: its first derivative is positive for $x < \mu$, negative for $x > \mu$, and zero only at $x = \mu$.

- The area bounded by the curve and the $x$-axis is unity (i.e. equal to one).

- Its first derivative is $f'(x) = -\frac{x - \mu}{\sigma^2} f(x)$.

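- Its density has two inflection points (where the second derivative of $f$ is zero and changes sign), located one standard deviation away from the mean, namely at $x = \mu - \sigma$ and $x = \mu + \sigma$.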

- Its density is log-concave.[22]

- Its density is infinitely differentiable, indeed supersmooth of order 2.[23]

Furthermore, the density $\varphi$ of the standard normal distribution (i.e. $\mu = 0$ and $\sigma = 1$) also has the following properties:

- Its first derivative is $\varphi'(x) = -x\varphi(x)$.

- Its second derivative is $\varphi''(x) = (x^2 - 1)\varphi(x)$.

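- More generally, its $n$th derivative is $\varphi^{(n)}(x) = (-1)^n \operatorname{He}_n(x)\varphi(x)$, where $\operatorname{He}_n(x)$ is the $n$th (probabilist's) Hermite polynomial.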

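- The probability that a normally distributed variable $X$ with known $\mu$ and $\sigma^2$ is in a particular set can be calculated by using the fact that the fraction $Z = (X - \mu)/\sigma$ has a standard normal distribution.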

Moments

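The plain and absolute moments of a variable $X$ are the expected values of $X^p$ and $|X|^p$, respectively. If the expected value $\mu$ of $X$ is zero, these parameters are called central moments; otherwise, these parameters are called non-central moments. Usually we are interested only in moments with integer order $p$.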

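If $X$ has a normal distribution, the non-central moments exist and are finite for any $p$ whose real part is greater than −1. For any non-negative integer $p$, the plain central moments are:[25]

$$\operatorname{E}\left[(X - \mu)^p\right] = \begin{cases} 0 & \text{if } p \text{ is odd,} \\ \sigma^p (p - 1)!! & \text{if } p \text{ is even.} \end{cases}$$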

Here $n!!$ denotes the double factorial, that is, the product of all numbers from $n$ to 1 that have the same parity as $n$.

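The central absolute moments coincide with plain moments for all even orders, but are nonzero for odd orders. For any non-negative integer $p$,

$$\operatorname{E}\left[|X - \mu|^p\right] = \sigma^p (p - 1)!! \cdot \begin{cases} \sqrt{\frac{2}{\pi}} & \text{if } p \text{ is odd} \\ 1 & \text{if } p \text{ is even} \end{cases} = \sigma^p \cdot \frac{2^{p/2}\,\Gamma\left(\frac{p+1}{2}\right)}{\sqrt{\pi}}.$$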

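The last formula is valid also for any non-integer $p > -1$. When the mean $\mu \neq 0$, the plain and absolute moments can be expressed in terms of confluent hypergeometric functions ${}_1F_1$ and $U$.[citation needed]

$$\operatorname{E}\left[X^p\right] = \sigma^p \cdot \left(-i\sqrt{2}\right)^p\, U\left(-\frac{p}{2}, \frac{1}{2}, -\frac{\mu^2}{2\sigma^2}\right),$$

$$\operatorname{E}\left[|X|^p\right] = \sigma^p \cdot 2^{p/2}\, \frac{\Gamma\left(\frac{1+p}{2}\right)}{\sqrt{\pi}}\, {}_1F_1\left(-\frac{p}{2}, \frac{1}{2}, -\frac{\mu^2}{2\sigma^2}\right).$$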

These expressions remain valid even if $p$ is not an integer. See also generalized Hermite polynomials.

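| Order | Non-central moment | Central moment |
|---|---|---|
| 1 | $\mu$ | $0$ |
| 2 | $\mu^2 + \sigma^2$ | $\sigma^2$ |
| 3 | $\mu^3 + 3\mu\sigma^2$ | $0$ |
| 4 | $\mu^4 + 6\mu^2\sigma^2 + 3\sigma^4$ | $3\sigma^4$ |
| 5 | $\mu^5 + 10\mu^3\sigma^2 + 15\mu\sigma^4$ | $0$ |
| 6 | $\mu^6 + 15\mu^4\sigma^2 + 45\mu^2\sigma^4 + 15\sigma^6$ | $15\sigma^6$ |
| 7 | $\mu^7 + 21\mu^5\sigma^2 + 105\mu^3\sigma^4 + 105\mu\sigma^6$ | $0$ |
| 8 | $\mu^8 + 28\mu^6\sigma^2 + 210\mu^4\sigma^4 + 420\mu^2\sigma^6 + 105\sigma^8$ | $105\sigma^8$ |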
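The table rows follow from the central-moment formula and the binomial expansion of $X^p = (\mu + (X - \mu))^p$. The sketch below recomputes them; the helper names are illustrative, and the expansion step is our own derivation route rather than something stated above.

```python
import math


def double_factorial(n: int) -> int:
    """n!! = n (n - 2) (n - 4) ...; by convention (-1)!! = 0!! = 1."""
    result = 1
    while n > 1:
        result *= n
        n -= 2
    return result


def central_moment(p: int, sigma: float) -> float:
    """E[(X - mu)^p]: 0 for odd p, sigma^p (p - 1)!! for even p."""
    if p % 2 == 1:
        return 0.0
    return sigma ** p * double_factorial(p - 1)


def raw_moment(p: int, mu: float, sigma: float) -> float:
    """E[X^p] via the binomial expansion of (mu + (X - mu))^p."""
    return sum(math.comb(p, k) * mu ** (p - k) * central_moment(k, sigma) for k in range(p + 1))


def abs_central_moment(p: float, sigma: float) -> float:
    """E[|X - mu|^p] = sigma^p 2^(p/2) Gamma((p + 1) / 2) / sqrt(pi), for p > -1."""
    return sigma ** p * 2 ** (p / 2) * math.gamma((p + 1) / 2) / math.sqrt(math.pi)


print([raw_moment(p, 1.0, 2.0) for p in range(1, 5)])  # [1.0, 5.0, 13.0, 73.0]
```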

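The expectation of $X$ conditioned on the event that $X$ lies in an interval $[a, b]$ is given by

$$\operatorname{E}\left[X \mid a < X < b\right] = \mu - \sigma^2\frac{f(b) - f(a)}{F(b) - F(a)},$$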

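where $f$ and $F$ respectively are the density and the cumulative distribution function of $X$. For $b = \infty$ this is known as the inverse Mills ratio. Note that above, the density $f$ of $X$ is used instead of the standard normal density as in the inverse Mills ratio, so here we have $\sigma^2$ instead of $\sigma$.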
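The truncated-mean formula can be evaluated with `statistics.NormalDist`, which provides `pdf` and `cdf`. As a sanity check, this sketch also computes the same conditional expectation by a midpoint-rule integral; the step count is an arbitrary choice.

```python
from statistics import NormalDist


def truncated_mean(mu: float, sigma: float, a: float, b: float) -> float:
    """E[X | a < X < b] = mu - sigma^2 * (f(b) - f(a)) / (F(b) - F(a)),
    where f and F are the density and CDF of X ~ N(mu, sigma^2)."""
    X = NormalDist(mu, sigma)
    return mu - sigma ** 2 * (X.pdf(b) - X.pdf(a)) / (X.cdf(b) - X.cdf(a))


def truncated_mean_numeric(mu: float, sigma: float, a: float, b: float, steps: int = 100_000) -> float:
    """Midpoint-rule cross-check: integral of x f(x) over [a, b] divided by integral of f."""
    X = NormalDist(mu, sigma)
    h = (b - a) / steps
    num = den = 0.0
    for i in range(steps):
        x = a + (i + 0.5) * h
        w = X.pdf(x)
        num += x * w
        den += w
    return num / den


print(truncated_mean(1.0, 2.0, 0.5, 3.0), truncated_mean_numeric(1.0, 2.0, 0.5, 3.0))
print(truncated_mean(0.0, 1.0, 0.0, 50.0))  # b far in the tail: the half-normal mean sqrt(2/pi)
```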

Fourier transform and characteristic function

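The Fourier transform of a normal density $f$ with mean $\mu$ and standard deviation $\sigma$ is[26]

$$\hat{f}(t) = \int_{-\infty}^{\infty} f(x)\, e^{-itx}\,dx = e^{-i\mu t}\, e^{-\frac{1}{2}(\sigma t)^2},$$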

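where $i$ is the imaginary unit. If the mean $\mu = 0$, the first factor is 1, and the Fourier transform is, apart from a constant factor, a normal density on the frequency domain, with mean 0 and standard deviation $1/\sigma$. In particular, the standard normal distribution $\varphi$ is an eigenfunction of the Fourier transform.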

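In probability theory, the Fourier transform of the probability distribution of a real-valued random variable $X$ is closely connected to the characteristic function $\varphi_X(t)$ of that variable, which is defined as the expected value of $e^{itX}$, as a function of the real variable $t$ (the frequency parameter of the Fourier transform). This definition can be analytically extended to a complex-valued variable $t$.[27] The relation between both is:

$$\varphi_X(t) = \hat{f}(-t).$$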

Moment- and cumulant-generating functions

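The moment generating function of a real random variable $X$ is the expected value of $e^{tX}$, as a function of the real parameter $t$. For a normal distribution with density $f$, mean $\mu$ and standard deviation $\sigma$, the moment generating function exists and is equal to

$$M(t) = \operatorname{E}\left[e^{tX}\right] = \hat{f}(it) = e^{\mu t}\, e^{\sigma^2 t^2/2}.$$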

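The cumulant generating function is the logarithm of the moment generating function, namely

$$g(t) = \ln M(t) = \mu t + \frac{1}{2}\sigma^2 t^2.$$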

Since this is a quadratic polynomial in $t$, only the first two cumulants are nonzero, namely the mean $\mu$ and the variance $\sigma^2$.
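The closed-form MGF can be checked against a direct numerical evaluation of $\operatorname{E}[e^{tX}]$. This is a sketch using the trapezoidal rule over a finite window; the window half-width and step count are illustrative choices, not part of the formula.

```python
import math


def mgf_closed_form(t: float, mu: float, sigma: float) -> float:
    """M(t) = exp(mu t + sigma^2 t^2 / 2)."""
    return math.exp(mu * t + 0.5 * sigma ** 2 * t ** 2)


def mgf_numeric(t: float, mu: float, sigma: float, half_width: float = 12.0, steps: int = 20_000) -> float:
    """Trapezoidal-rule estimate of E[exp(tX)] over mu +/- half_width * sigma.

    For large |t| the integrand's peak moves to mu + sigma^2 t, so the
    window may need widening.
    """
    lo = mu - half_width * sigma
    h = 2.0 * half_width * sigma / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * math.exp(t * x - 0.5 * ((x - mu) / sigma) ** 2)
    return total * h / (sigma * math.sqrt(2.0 * math.pi))


def cgf(t: float, mu: float, sigma: float) -> float:
    """Cumulant generating function g(t) = ln M(t) = mu t + sigma^2 t^2 / 2."""
    return mu * t + 0.5 * sigma ** 2 * t ** 2


print(mgf_numeric(0.5, 1.0, 2.0), mgf_closed_form(0.5, 1.0, 2.0))
```

Because $g$ is quadratic, its second difference quotient returns $\sigma^2$ for any step size, and all higher derivatives (the higher cumulants) vanish.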

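Within Stein's method, the Stein operator and class of a random variable $X \sim \mathcal{N}(\mu, \sigma^2)$ are $\mathcal{A}f(x) = \sigma^2 f'(x) - (x - \mu)f(x)$ and $\mathcal{F}$, the class of all absolutely continuous functions $f : \mathbb{R} \to \mathbb{R}$ such that $\operatorname{E}\left[|f'(X)|\right] < \infty$.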

Zero-variance limit

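In the limit when $\sigma$ tends to zero, the probability density $f(x)$ eventually tends to zero at any $x \neq \mu$, but grows without limit if $x = \mu$, while its integral remains equal to 1. Therefore, the normal distribution cannot be defined as an ordinary function when $\sigma = 0$.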

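However, one can define the normal distribution with zero variance as a generalized function; specifically, as Dirac's "delta function" $\delta$ translated by the mean $\mu$, that is, $f(x) = \delta(x - \mu)$. Its CDF is then the Heaviside step function translated by the mean $\mu$, namely

$$F(x) = \begin{cases} 0 & \text{if } x < \mu \\ 1 & \text{if } x \geq \mu. \end{cases}$$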

Maximum entropy

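Of all probability distributions over the reals with a specified mean $\mu$ and variance $\sigma^2$, the normal distribution $N(\mu, \sigma^2)$ is the one with maximum entropy.[28] If $X$ is a continuous random variable with probability density $f(x)$, then the entropy of $X$ is defined as[29][30][31]

$$H(X) = -\int_{-\infty}^{\infty} f(x)\log f(x)\,dx,$$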

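where $f(x)\log f(x)$ is understood to be zero whenever $f(x) = 0$. This functional can be maximized, subject to the constraints that the distribution is properly normalized and has a specified variance, by using variational calculus. A function with two Lagrange multipliers is defined:

$$L = -\int_{-\infty}^{\infty} f(x)\ln f(x)\,dx - \lambda_0\left(1 - \int_{-\infty}^{\infty} f(x)\,dx\right) - \lambda\left(\sigma^2 - \int_{-\infty}^{\infty} f(x)(x - \mu)^2\,dx\right),$$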

where $f(x)$ is, for now, regarded as some density function with mean $\mu$ and standard deviation $\sigma$.

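At maximum entropy, a small variation $\delta f(x)$ about $f(x)$ will produce a variation $\delta L$ about $L$ which is equal to 0:

$$0 = \delta L = \int_{-\infty}^{\infty} \delta f(x)\left(-\ln f(x) - 1 + \lambda_0 + \lambda(x - \mu)^2\right)dx.$$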

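Since this must hold for any small $\delta f(x)$, the factor multiplying $\delta f(x)$ must be zero, and solving for $f(x)$ yields:

$$f(x) = \exp\left(-1 + \lambda_0 + \lambda(x - \mu)^2\right).$$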

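Using the constraint equations to solve for $\lambda_0$ and $\lambda$ yields the density of the normal distribution:

$$f(x, \mu, \sigma) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}.$$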

The entropy of a normal distribution is equal to

$$H(X) = \frac{1}{2}\left(1 + \log(2\sigma^2\pi)\right).$$
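The maximum-entropy property can be illustrated by comparing the normal entropy (in nats) with two other distributions scaled to the same variance. The uniform and Laplace entropy formulas in this sketch are standard facts we bring in for comparison; they are not derived in the text above.

```python
import math


def normal_entropy(sigma: float) -> float:
    """Differential entropy (nats) of N(mu, sigma^2): 0.5 * (1 + ln(2 pi sigma^2))."""
    return 0.5 * (1.0 + math.log(2.0 * math.pi * sigma ** 2))


def uniform_entropy_same_variance(sigma: float) -> float:
    """Uniform on an interval of width w has variance w^2 / 12 and entropy ln(w)."""
    return math.log(sigma * math.sqrt(12.0))


def laplace_entropy_same_variance(sigma: float) -> float:
    """Laplace with scale b has variance 2 b^2 and entropy 1 + ln(2 b)."""
    b = sigma / math.sqrt(2.0)
    return 1.0 + math.log(2.0 * b)


def normal_entropy_numeric(sigma: float, steps: int = 100_000) -> float:
    """-integral of f ln f over +/- 12 sigma with the midpoint rule (mu = 0 without loss of generality)."""
    lo = -12.0 * sigma
    h = 24.0 * sigma / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * h
        log_f = -0.5 * (x / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))
        total -= math.exp(log_f) * log_f * h
    return total


for s in (0.3, 1.0, 5.0):
    print(s, normal_entropy(s), uniform_entropy_same_variance(s), laplace_entropy_same_variance(s))
```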

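- If the characteristic function $\phi_X$ of some random variable $X$ is of the form $\phi_X(t) = \exp(Q(t))$ in a neighborhood of zero, where $Q(t)$ is a polynomial, then the Marcinkiewicz theorem asserts that $Q$ can be at most a quadratic polynomial, and therefore $X$ is a normal random variable.[32] The consequence of this result is that the normal distribution is the only distribution with a finite number (two) of non-zero cumulants.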

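- If $X$ and $Y$ are jointly normal and uncorrelated, then they are independent. The requirement that $X$ and $Y$ should be jointly normal is essential; without it the property does not hold.[33][34][proof] For non-normal random variables, uncorrelatedness does not imply independence.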

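- The Kullback–Leibler divergence of one normal distribution $X_1 \sim N(\mu_1, \sigma_1^2)$ from another $X_2 \sim N(\mu_2, \sigma_2^2)$ is given by $$D_{\mathrm{KL}}(X_1 \Vert X_2) = \frac{(\mu_1 - \mu_2)^2}{2\sigma_2^2} + \frac{1}{2}\left(\frac{\sigma_1^2}{\sigma_2^2} - 1 - \ln\frac{\sigma_1^2}{\sigma_2^2}\right).$$ The Hellinger distance between the same distributions is equal to $$H^2(X_1, X_2) = 1 - \sqrt{\frac{2\sigma_1\sigma_2}{\sigma_1^2 + \sigma_2^2}}\, \exp\left(-\frac{1}{4}\frac{(\mu_1 - \mu_2)^2}{\sigma_1^2 + \sigma_2^2}\right).$$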

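- The Fisher information matrix for a normal distribution with respect to $\mu$ and $\sigma^2$ is diagonal and takes the form $$\mathcal{I}(\mu, \sigma^2) = \begin{pmatrix} \frac{1}{\sigma^2} & 0 \\ 0 & \frac{1}{2\sigma^4} \end{pmatrix}.$$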

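- The conjugate prior of the mean of a normal distribution is another normal distribution.[36] Specifically, if $x_1, \ldots, x_n$ are i.i.d. $\sim N(\mu, \sigma^2)$ and the prior is $\mu \sim N(\mu_0, \sigma_0^2)$, then the posterior distribution for the estimator of $\mu$ will be $$\mu \mid x_1, \ldots, x_n \sim \mathcal{N}\left(\frac{\frac{\sigma^2}{n}\mu_0 + \sigma_0^2\bar{x}}{\frac{\sigma^2}{n} + \sigma_0^2},\ \left(\frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}\right)^{-1}\right).$$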

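- The family of normal distributions not only forms an exponential family (EF), but in fact forms a natural exponential family (NEF) with quadratic variance function (NEF-QVF). Many properties of normal distributions generalize to properties of NEF-QVF distributions, NEF distributions, or EF distributions generally. NEF-QVF distributions comprise six families, including the Poisson, gamma, binomial, and negative binomial distributions, while many of the common families studied in probability and statistics are NEF or EF.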

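- In information geometry, the family of normal distributions forms a statistical manifold with constant curvature $-1$. The same family is flat with respect to the (±1)-connections $\nabla^{(e)}$ and $\nabla^{(m)}$.[37]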
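The Kullback–Leibler and Hellinger formulas in the list above translate directly into code. This is a minimal sketch with illustrative function names, showing that the divergence is zero for identical distributions and is not symmetric in its arguments:

```python
import math


def kl_normal(mu1: float, s1: float, mu2: float, s2: float) -> float:
    """D_KL(N(mu1, s1^2) || N(mu2, s2^2))."""
    r = (s1 / s2) ** 2
    return (mu1 - mu2) ** 2 / (2 * s2 ** 2) + 0.5 * (r - 1 - math.log(r))


def hellinger_sq_normal(mu1: float, s1: float, mu2: float, s2: float) -> float:
    """Squared Hellinger distance between N(mu1, s1^2) and N(mu2, s2^2)."""
    v = s1 ** 2 + s2 ** 2
    return 1 - math.sqrt(2 * s1 * s2 / v) * math.exp(-0.25 * (mu1 - mu2) ** 2 / v)


print(kl_normal(0.0, 1.0, 0.0, 2.0), kl_normal(0.0, 2.0, 0.0, 1.0))  # different: KL is asymmetric
print(hellinger_sq_normal(0.0, 1.0, 3.0, 0.5))
```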

Related distributions

Central limit theorem

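The central limit theorem states that under certain (fairly common) conditions, the sum of many random variables will have an approximately normal distribution. More specifically, where $X_1, \ldots, X_n$ are independent and identically distributed random variables with the same arbitrary distribution, zero mean, and variance $\sigma^2$, and $Z$ is their mean scaled by $\sqrt{n}$:

$$Z = \sqrt{n}\left(\frac{1}{n}\sum_{i=1}^{n} X_i\right).$$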

Then, as $n$ increases, the probability distribution of $Z$ will tend to the normal distribution with zero mean and variance $\sigma^2$.

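The theorem can be extended to variables $(X_i)$ that are not independent and/or not identically distributed if certain constraints are placed on the degree of dependence and the moments of the distributions.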
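A short simulation makes the theorem concrete. This sketch assumes Uniform(−1, 1) summands (an arbitrary illustrative choice with mean 0 and variance 1/3) and uses a fixed seed. It then checks that the scaled mean $Z$ has variance near $\sigma^2$ and puts about 68% of its mass within one standard deviation.

```python
import math
import random
import statistics


def scaled_means(n, trials, seed=0):
    """Draw `trials` values of Z = sqrt(n) * (mean of n i.i.d. Uniform(-1, 1) draws)."""
    rng = random.Random(seed)
    out = []
    for _ in range(trials):
        total = sum(rng.uniform(-1.0, 1.0) for _ in range(n))
        out.append(math.sqrt(n) * total / n)
    return out


zs = scaled_means(n=30, trials=20_000, seed=1)
sigma = math.sqrt(1.0 / 3.0)  # standard deviation of Uniform(-1, 1)
within_one_sigma = sum(abs(z) < sigma for z in zs) / len(zs)
print(statistics.fmean(zs), statistics.variance(zs), within_one_sigma)
```

Each summand is uniform, yet the fraction within $\pm\sigma$ comes out close to the normal value 0.6827.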

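Many test statistics, scores, and estimators encountered in practice contain sums of certain random variables, and even more estimators can be represented as sums of random variables through the use of influence functions. The central limit theorem implies that those statistical parameters will have asymptotically normal distributions.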

The central limit theorem also implies that certain distributions can be approximated by the normal distribution, for example:

- The binomial distribution $B(n, p)$ is approximately normal with mean $np$ and variance $np(1 - p)$ for large $n$ and for $p$ not too close to 0 or 1.

- The Poisson distribution with parameter $\lambda$ is approximately normal with mean $\lambda$ and variance $\lambda$, for large values of $\lambda$.[38]

- The chi-squared distribution $\chi^2(k)$ is approximately normal with mean $k$ and variance $2k$, for large $k$.

- The Student's t-distribution $t(\nu)$ is approximately normal with mean 0 and variance 1 when $\nu$ is large.
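The binomial case can be checked by comparing an exact binomial CDF with its normal approximation. The sketch adds a +0.5 continuity correction, which is a common refinement that the list above does not mention; it can be switched off with `continuity=False`.

```python
import math


def binom_cdf(k: int, n: int, p: float) -> float:
    """Exact P(X <= k) for X ~ B(n, p)."""
    return sum(math.comb(n, j) * p ** j * (1 - p) ** (n - j) for j in range(k + 1))


def binom_cdf_normal(k: int, n: int, p: float, continuity: bool = True) -> float:
    """Normal approximation with mean np and variance np(1 - p).

    The +0.5 continuity correction is an added refinement, not from the text.
    """
    mean = n * p
    sd = math.sqrt(n * p * (1 - p))
    x = k + 0.5 if continuity else k
    return 0.5 * (1 + math.erf((x - mean) / (sd * math.sqrt(2))))


print(binom_cdf(55, 100, 0.5), binom_cdf_normal(55, 100, 0.5))
```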

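Whether these approximations are sufficiently accurate depends on the purpose for which they are needed, and on the rate of convergence to the normal distribution. It is typically the case that such approximations are less accurate in the tails of the distribution.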

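A general upper bound for the approximation error in the central limit theorem is given by the Berry–Esseen theorem; improvements of the approximation are given by the Edgeworth expansions.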

This theorem can also be used to justify modeling the sum of many uniform noise sources as Gaussian noise. See AWGN.

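The probability density, cumulative distribution, and inverse cumulative distribution of any function of one or more independent or correlated normal variables can be computed with the numerical method of ray-tracing[39] (Matlab code). In the following sections we look at some special cases.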

If $X$ is distributed normally with mean $\mu$ and variance $\sigma^2$, then

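- $aX + b$, for any real numbers $a$ and $b$, is also normally distributed, with mean $a\mu + b$ and standard deviation $|a|\sigma$. That is, the family of normal distributions is closed under linear transformations.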

- The exponential of $X$ is distributed log-normally: $e^X \sim \ln(N(\mu, \sigma^2))$.

- The absolute value of $X$ has a folded normal distribution: $|X| \sim N_f(\mu, \sigma^2)$. If $\mu = 0$ this is known as the half-normal distribution.

- The absolute value of normalized residuals, $|X - \mu|/\sigma$, has a chi distribution with one degree of freedom: $|X - \mu|/\sigma \sim \chi_1$.

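- The square of $X/\sigma$ has the noncentral chi-squared distribution with one degree of freedom: $X^2/\sigma^2 \sim \chi_1^2(\mu^2/\sigma^2)$. If $\mu = 0$, the distribution is called simply chi-squared.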

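- The log-likelihood of a normal variable $x$ is simply the log of its probability density function: $$\ln p(x) = -\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2 - \ln\left(\sigma\sqrt{2\pi}\right).$$ Since this is a scaled and shifted square of a standard normal variable, it is distributed as a scaled and shifted chi-squared variable.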

- The distribution of the variable $X$ restricted to an interval $[a, b]$ is called the truncated normal distribution.

- $(X - \mu)^{-2}$ has a Lévy distribution with location 0 and scale $\sigma^{-2}$.

Operations on two independent normal variables

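- If $X_1$ and $X_2$ are two independent normal random variables, with means $\mu_1$, $\mu_2$ and variances $\sigma_1^2$, $\sigma_2^2$, then their sum $X_1 + X_2$ will also be normally distributed,[proof] with mean $\mu_1 + \mu_2$ and variance $\sigma_1^2 + \sigma_2^2$.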

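- In particular, if $X$ and $Y$ are independent normal deviates with zero mean and variance $\sigma^2$, then $X + Y$ and $X - Y$ are also independent and normally distributed, with zero mean and variance $2\sigma^2$. This is a special case of the polarization identity.[40]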

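- If $X_1$, $X_2$ are two independent normal deviates with mean $\mu$ and standard deviation $\sigma$, and $a$, $b$ are arbitrary real numbers, then the variable $$X_3 = \frac{aX_1 + bX_2 - (a + b)\mu}{\sqrt{a^2 + b^2}} + \mu$$ is also normally distributed with mean $\mu$ and standard deviation $\sigma$. It follows that the normal distribution is stable (with exponent $\alpha = 2$).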
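Python's `statistics.NormalDist` supports exactly this arithmetic. Adding two `NormalDist` instances treats them as independent variables, and multiplying, dividing, or adding a constant applies a linear transformation. The sketch below uses it to confirm the sum rule and the stability formula; the numeric parameters are arbitrary.

```python
import math
from statistics import NormalDist

# Sum of two independent normals: means add, variances add.
X1 = NormalDist(mu=1.0, sigma=3.0)
X2 = NormalDist(mu=-2.0, sigma=4.0)
S = X1 + X2
print(S.mean, S.stdev)  # -1.0 5.0

# Stability: X3 = (a X1 + b X2 - (a + b) mu) / sqrt(a^2 + b^2) + mu.
# NormalDist arithmetic treats the two operands of "+" as independent,
# so reusing Y for both terms models two independent N(mu, sigma^2) copies.
mu, sigma, a, b = 2.0, 1.5, 3.0, -0.5
Y = NormalDist(mu, sigma)
X3 = (Y * a + Y * b - (a + b) * mu) / math.hypot(a, b) + mu
print(X3.mean, X3.stdev)  # mean mu, standard deviation sigma (up to rounding)
```

Because `NormalDist` does not track identity, `Y + Y` would also be treated as a sum of two independent copies (standard deviation $\sigma\sqrt{2}$), not as $2Y$ (standard deviation $2\sigma$).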

Operations on two independent standard normal variables

If $X_1$ and $X_2$ are two independent standard normal random variables with mean 0 and variance 1, then

- Their sum and difference is distributed normally with mean zero and variance two: $X_1 \pm X_2 \sim N(0, 2)$.

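- Their product $Z = X_1 X_2$ follows the product distribution[41] with density function $f_Z(z) = \pi^{-1}K_0(|z|)$, where $K_0$ is the modified Bessel function of the second kind. This distribution is symmetric around zero, unbounded at $z = 0$, and has the characteristic function $\phi_Z(t) = (1 + t^2)^{-1/2}$.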

- Their ratio follows the standard Cauchy distribution: $X_1/X_2 \sim \operatorname{Cauchy}(0, 1)$.

- Their Euclidean norm $\sqrt{X_1^2 + X_2^2}$ has the Rayleigh distribution.

Operations on multiple independent normal variables

- Any linear combination of independent normal deviates is a normal deviate.

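- If $X_1, X_2, \ldots, X_n$ are independent standard normal random variables, then the sum of their squares has the chi-squared distribution with $n$ degrees of freedom: $X_1^2 + \cdots + X_n^2 \sim \chi_n^2$.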

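- If $X_1, X_2, \ldots, X_n$ are independent normally distributed random variables with means $\mu$ and variances $\sigma^2$, then their sample mean is independent from the sample standard deviation,[42] which can be demonstrated using Basu's theorem or Cochran's theorem.[43] The ratio of these two quantities will have the Student's t-distribution with $n - 1$ degrees of freedom: $$t = \frac{\bar{X} - \mu}{S/\sqrt{n}} = \frac{\frac{1}{n}(X_1 + \cdots + X_n) - \mu}{\sqrt{\frac{1}{n(n-1)}\left[(X_1 - \bar{X})^2 + \cdots + (X_n - \bar{X})^2\right]}} \sim t_{n-1}.$$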

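- If $X_1, \ldots, X_n$, $Y_1, \ldots, Y_m$ are independent standard normal random variables, then the ratio of their normalized sums of squares will have the F-distribution with $(n, m)$ degrees of freedom: $$F = \frac{\left(X_1^2 + X_2^2 + \cdots + X_n^2\right)/n}{\left(Y_1^2 + Y_2^2 + \cdots + Y_m^2\right)/m} \sim F_{n,m}.$$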

Operations on the density function

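The split normal distribution is most directly defined in terms of joining scaled sections of the density functions of different normal distributions and rescaling the density to integrate to one. The truncated normal distribution results from rescaling a section of a single density function.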

Infinite divisibility and Cramér's theorem

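For any positive integer $n$, any normal distribution with mean $\mu$ and variance $\sigma^2$ is the distribution of the sum of $n$ independent normal deviates, each with mean $\mu/n$ and variance $\sigma^2/n$. This property is called infinite divisibility.[45]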

Conversely, if $X_1$ and $X_2$ are independent random variables and their sum $X_1 + X_2$ has a normal distribution, then both $X_1$ and $X_2$ must be normal deviates.[46]

This result is known as Cramér's decomposition theorem, and is equivalent to saying that the convolution of two distributions is normal if and only if both are normal. Cramér's theorem implies that a linear combination of independent non-Gaussian variables will never have an exactly normal distribution, although it may approach it arbitrarily closely.[32]

Bernstein's theorem

Bernstein's theorem states that if $X$ and $Y$ are independent and $X + Y$ and $X - Y$ are also independent, then both $X$ and $Y$ must necessarily have normal distributions.[47][48]

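More generally, if $X_1, \ldots, X_n$ are independent random variables, then two distinct linear combinations $\sum a_k X_k$ and $\sum b_k X_k$ will be independent if and only if all $X_k$ are normal and $\sum a_k b_k \sigma_k^2 = 0$, where $\sigma_k^2$ denotes the variance of $X_k$.[47]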

Extensions

The notion of normal distribution, being one of the most important distributions in probability theory, has been extended far beyond the standard framework of the univariate (that is, one-dimensional) case (Case 1). All these extensions are also called normal or Gaussian laws, so a certain ambiguity in names exists.

- The multivariate normal distribution describes the Gaussian law in the $k$-dimensional Euclidean space. A vector $\mathbf{X} \in \mathbb{R}^k$ is multivariate-normally distributed if any linear combination of its components $\sum_{j=1}^{k} a_j X_j$ has a (univariate) normal distribution. The variance of $\mathbf{X}$ is a $k \times k$ symmetric positive-definite matrix $\mathbf{V}$. The multivariate normal distribution is a special case of the elliptical distributions. As such, its iso-density loci in the $k = 2$ case are ellipses and in the case of arbitrary $k$ are ellipsoids.

- Rectified Gaussian distribution: a rectified version of the normal distribution with all the negative elements reset to 0.

- Complex normal distribution deals with the complex normal vectors. A complex vector $\mathbf{X} \in \mathbb{C}^k$ is said to be normal if both its real and imaginary components jointly possess a $2k$-dimensional multivariate normal distribution. The variance-covariance structure of $\mathbf{X}$ is described by two matrices: the variance matrix $\Gamma$, and the relation matrix $C$.

- Matrix normal distribution describes the case of normally distributed matrices.

- Gaussian processes are the normally distributed stochastic processes. These can be viewed as elements of some infinite-dimensional Hilbert space $H$, and thus are the analogues of multivariate normal vectors for the case $k = \infty$. A random element $h \in H$ is said to be normal if for any constant $a \in H$ the scalar product $(a, h)$ has a (univariate) normal distribution. The variance structure of such a Gaussian random element can be described in terms of the linear covariance operator $K: H \to H$. Several Gaussian processes became popular enough to have their own names, such as Brownian motion, the Brownian bridge, and the Ornstein–Uhlenbeck process.

- Gaussian q-distribution is an abstract mathematical construction that represents a "q-analogue" of the normal distribution.

- the q-Gaussian is an analogue of the Gaussian distribution, in the sense that it maximises the Tsallis entropy, and is one type of Tsallis distribution. Note that this distribution is different from the Gaussian q-distribution above.

- The Kaniadakis κ-Gaussian distribution is a generalization of the Gaussian distribution which arises from the Kaniadakis statistics, being one of the Kaniadakis distributions.

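A random variable $X$ has a two-piece normal distribution if it has a density

$$f_X(x) = \begin{cases} A\exp\left(-\frac{(x-\mu)^2}{2\sigma_1^2}\right) & \text{if } x < \mu \\ A\exp\left(-\frac{(x-\mu)^2}{2\sigma_2^2}\right) & \text{if } x \geq \mu \end{cases}, \qquad A = \frac{\sqrt{2/\pi}}{\sigma_1 + \sigma_2},$$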

where $\mu$ is the mode (the point where the two halves meet), and $\sigma_1$ and $\sigma_2$ are the standard deviations of the halves to the left and right of $\mu$, respectively.

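The mean, variance and third central moment of this distribution have been determined[49]

$$\operatorname{E}(X) = \mu + \sqrt{\frac{2}{\pi}}(\sigma_2 - \sigma_1),$$

$$\operatorname{V}(X) = \left(1 - \frac{2}{\pi}\right)(\sigma_2 - \sigma_1)^2 + \sigma_1\sigma_2,$$

$$\operatorname{T}(X) = \sqrt{\frac{2}{\pi}}(\sigma_2 - \sigma_1)\left[\left(\frac{4}{\pi} - 1\right)(\sigma_2 - \sigma_1)^2 + \sigma_1\sigma_2\right],$$

where $\operatorname{E}(X)$, $\operatorname{V}(X)$ and $\operatorname{T}(X)$ are the mean, variance, and third central moment respectively.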
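The three moment formulas can be cross-checked by integrating the two-piece density numerically. This sketch uses the midpoint rule over a window of 12 half-widths on each side; the window and step count are our illustrative choices.

```python
import math


def two_piece_pdf(x: float, mu: float, s1: float, s2: float) -> float:
    """Two-piece normal density with normalizing constant A = sqrt(2/pi) / (s1 + s2)."""
    A = math.sqrt(2 / math.pi) / (s1 + s2)
    s = s1 if x < mu else s2
    return A * math.exp(-0.5 * ((x - mu) / s) ** 2)


def two_piece_moments(mu: float, s1: float, s2: float):
    """Closed-form mean E(X), variance V(X), and third central moment T(X)."""
    c = math.sqrt(2 / math.pi)
    d = s2 - s1
    mean = mu + c * d
    var = (1 - 2 / math.pi) * d ** 2 + s1 * s2
    third = c * d * ((4 / math.pi - 1) * d ** 2 + s1 * s2)
    return mean, var, third


def numeric_moments(mu: float, s1: float, s2: float, steps: int = 100_000):
    """Midpoint-rule total mass, mean, variance and third central moment."""
    lo, hi = mu - 12 * s1, mu + 12 * s2
    h = (hi - lo) / steps
    raw = [0.0, 0.0, 0.0, 0.0]  # integrals of x^k f(x) for k = 0..3
    for i in range(steps):
        x = lo + (i + 0.5) * h
        w = two_piece_pdf(x, mu, s1, s2) * h
        raw[0] += w
        raw[1] += w * x
        raw[2] += w * x * x
        raw[3] += w * x ** 3
    mean = raw[1]
    var = raw[2] - mean ** 2
    third = raw[3] - 3 * mean * raw[2] + 2 * mean ** 3
    return raw[0], mean, var, third


print(two_piece_moments(0.5, 1.0, 2.0))
print(numeric_moments(0.5, 1.0, 2.0))
```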

One of the main practical uses of the Gaussian law is to model the empirical distributions of many different random variables encountered in practice. In such case a possible extension would be a richer family of distributions, having more than two parameters and therefore being able to fit the empirical distribution more accurately. The examples of such extensions are:

- Pearson distribution — a four-parameter family of probability distributions that extend the normal law to include different skewness and kurtosis values.

- The generalized normal distribution, also known as the exponential power distribution, allows for distribution tails with thicker or thinner asymptotic behaviors.

Statistical inference

Estimation of parameters

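It is often the case that we do not know the parameters of the normal distribution, but instead want to estimate them. That is, having a sample $(x_1, \ldots, x_n)$ from a normal $\mathcal{N}(\mu, \sigma^2)$ population we would like to learn the approximate values of parameters $\mu$ and $\sigma^2$. The standard approach to this problem is the maximum likelihood method, which requires maximization of the log-likelihood function:

$$\ln\mathcal{L}(\mu, \sigma^2) = \sum_{i=1}^{n}\ln f(x_i \mid \mu, \sigma^2) = -\frac{n}{2}\ln(2\pi) - \frac{n}{2}\ln\sigma^2 - \frac{1}{2\sigma^2}\sum_{i=1}^{n}(x_i - \mu)^2.$$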

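Taking derivatives with respect to $\mu$ and $\sigma^2$ and solving the resulting system of first order conditions yields the maximum likelihood estimates:

$$\hat{\mu} = \bar{x} \equiv \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad \hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^2.$$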
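The estimates can be computed directly, and evaluating the log-likelihood on a small grid around them illustrates that they are indeed the maximizer. The data values in this sketch are made up for illustration.

```python
import math
import statistics


def normal_log_likelihood(xs, mu, sigma2):
    """ln L = -n/2 ln(2 pi) - n/2 ln(sigma^2) - sum (x_i - mu)^2 / (2 sigma^2)."""
    n = len(xs)
    ss = sum((x - mu) ** 2 for x in xs)
    return -0.5 * n * math.log(2 * math.pi) - 0.5 * n * math.log(sigma2) - ss / (2 * sigma2)


def normal_mle(xs):
    """Maximum likelihood estimates: the sample mean and the 1/n variance."""
    n = len(xs)
    mu_hat = sum(xs) / n
    sigma2_hat = sum((x - mu_hat) ** 2 for x in xs) / n
    return mu_hat, sigma2_hat


data = [2.1, 1.7, 3.4, 2.9, 2.2, 2.6]  # hypothetical sample
mu_hat, sigma2_hat = normal_mle(data)
print(mu_hat, sigma2_hat, statistics.pvariance(data))  # pvariance also uses the 1/n denominator
```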

Sample mean

The estimator $\hat{\mu}$ is called the sample mean, since it is the arithmetic mean of all observations. The statistic $\bar{x}$ is complete and sufficient for $\mu$, and therefore by the Lehmann–Scheffé theorem, $\hat{\mu}$ is the uniformly minimum variance unbiased (UMVU) estimator.[50] In finite samples it is distributed normally:

$$\hat{\mu} \sim \mathcal{N}(\mu, \sigma^2/n).$$

The variance of this estimator is equal to the $\mu\mu$-element of the inverse Fisher information matrix $\mathcal{I}^{-1}$. This implies that the estimator is finite-sample efficient. Of practical importance is the fact that the standard error of $\hat{\mu}$ is proportional to $1/\sqrt{n}$; that is, if one wishes to decrease the standard error by a factor of 10, one must increase the number of points in the sample by a factor of 100. This fact is widely used in determining sample sizes for opinion polls and the number of trials in Monte Carlo simulations.

From the standpoint of the asymptotic theory, $\hat{\mu}$ is consistent, that is, it converges in probability to $\mu$ as $n \to \infty$. The estimator is also asymptotically normal, which is a simple corollary of the fact that it is normal in finite samples:

$$\sqrt{n}(\hat{\mu} - \mu) \xrightarrow{d} \mathcal{N}(0, \sigma^2).$$

Sample variance

The estimator $\hat{\sigma}^2$ is called the sample variance, since it is the variance of the sample $(x_1, \ldots, x_n)$. In practice, another estimator is often used instead of $\hat{\sigma}^2$. This other estimator is denoted $s^2$, and is also called the sample variance, which represents a certain ambiguity in terminology; its square root $s$ is called the sample standard deviation. The estimator $s^2$ differs from $\hat{\sigma}^2$ by having $(n - 1)$ instead of $n$ in the denominator (the so-called Bessel's correction):

$$s^2 = \frac{n}{n-1}\hat{\sigma}^2 = \frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})^2.$$

The difference between $s^2$ and $\hat{\sigma}^2$ becomes negligibly small for large $n$. In finite samples however, the motivation behind the use of $s^2$ is that it is an unbiased estimator of the underlying parameter $\sigma^2$, whereas $\hat{\sigma}^2$ is biased. Also, by the Lehmann–Scheffé theorem the estimator $s^2$ is uniformly minimum variance unbiased (UMVU),[50] which makes it the "best" estimator among all unbiased ones. However it can be shown that the biased estimator $\hat{\sigma}^2$ is "better" than $s^2$ in terms of the mean squared error (MSE) criterion. In finite samples both $s^2$ and $\hat{\sigma}^2$ have a scaled chi-squared distribution with $(n - 1)$ degrees of freedom:

$$s^2 \sim \frac{\sigma^2}{n-1}\cdot\chi^2_{n-1}, \qquad \hat{\sigma}^2 \sim \frac{\sigma^2}{n}\cdot\chi^2_{n-1}.$$

The first of these expressions shows that the variance of $s^2$ is equal to $2\sigma^4/(n-1)$, which is slightly greater than the $\sigma\sigma$-element of the inverse Fisher information matrix, $2\sigma^4/n$. Thus, $s^2$ is not an efficient estimator for $\sigma^2$, and moreover, since $s^2$ is UMVU, we can conclude that the finite-sample efficient estimator for $\sigma^2$ does not exist.

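Applying the asymptotic theory, both estimators $s^2$ and $\hat{\sigma}^2$ are consistent, that is, they converge in probability to $\sigma^2$ as the sample size $n \to \infty$. The two estimators are also both asymptotically normal:

$$\sqrt{n}(\hat{\sigma}^2 - \sigma^2) \simeq \sqrt{n}(s^2 - \sigma^2) \xrightarrow{d} \mathcal{N}(0, 2\sigma^4).$$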

In particular, both estimators are asymptotically efficient for $\sigma^2$.
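The bias of $\hat{\sigma}^2$ and the unbiasedness of $s^2$ show up clearly in simulation. This sketch draws many small samples from $N(0, \sigma^2)$ with a fixed seed and averages both estimators; the sample size, $\sigma$, and trial count are arbitrary choices.

```python
import random


def average_variance_estimates(n, sigma, trials, seed=0):
    """Average the 1/n (MLE) and 1/(n - 1) (Bessel-corrected) variance estimates
    over many simulated samples of size n from N(0, sigma^2)."""
    rng = random.Random(seed)
    mle_total = unbiased_total = 0.0
    for _ in range(trials):
        xs = [rng.gauss(0.0, sigma) for _ in range(n)]
        mean = sum(xs) / n
        ss = sum((x - mean) ** 2 for x in xs)
        mle_total += ss / n            # sigma_hat^2
        unbiased_total += ss / (n - 1)  # s^2
    return mle_total / trials, unbiased_total / trials


n, sigma = 5, 2.0
mle_avg, s2_avg = average_variance_estimates(n, sigma, trials=20_000, seed=3)
print(mle_avg, (n - 1) / n * sigma ** 2)  # E[sigma_hat^2] = (n - 1) / n * sigma^2 = 3.2
print(s2_avg, sigma ** 2)                 # E[s^2] = sigma^2 = 4.0
```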

Confidence intervals

By Cochran's theorem, for normal distributions the sample mean $\hat{\mu}$ and the sample variance $s^2$ are independent, which means there can be no gain in considering their joint distribution. There is also a converse theorem: if in a sample the sample mean and sample variance are independent, then the sample must have come from the normal distribution. The independence between $\hat{\mu}$ and $s$ can be employed to construct the so-called t-statistic:

$$t = \frac{\hat{\mu} - \mu}{s/\sqrt{n}} = \frac{\bar{x} - \mu}{\sqrt{\frac{1}{n(n-1)}\sum(x_i - \bar{x})^2}} \sim t_{n-1}.$$

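This quantity $t$ has the Student's t-distribution with $(n - 1)$ degrees of freedom, and it is an ancillary statistic (independent of the value of the parameters). Inverting the distribution of this t-statistic will allow us to construct the confidence interval for $\mu$;[51] similarly, inverting the $\chi^2$ distribution of the statistic $s^2$ will give us the confidence interval for $\sigma^2$:[52]

$$\mu \in \left[\hat{\mu} - t_{n-1,1-\alpha/2}\frac{s}{\sqrt{n}},\ \hat{\mu} + t_{n-1,1-\alpha/2}\frac{s}{\sqrt{n}}\right],$$

$$\sigma^2 \in \left[\frac{(n-1)s^2}{\chi^2_{n-1,1-\alpha/2}},\ \frac{(n-1)s^2}{\chi^2_{n-1,\alpha/2}}\right],$$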

where $t_{k,p}$ and $\chi^2_{k,p}$ are the $p$th quantiles of the t- and $\chi^2$-distributions respectively. These confidence intervals are of the confidence level $1 - \alpha$, meaning that the true values $\mu$ and $\sigma^2$ fall outside of these intervals with probability (or significance level) $\alpha$. In practice people usually take $\alpha = 5\%$, resulting in the 95% confidence intervals.

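Approximate formulas can be derived from the asymptotic distributions of $\hat{\mu}$ and $s^2$:

$$\mu \in \left[\hat{\mu} - |z_{\alpha/2}|\frac{s}{\sqrt{n}},\ \hat{\mu} + |z_{\alpha/2}|\frac{s}{\sqrt{n}}\right],$$

$$\sigma^2 \in \left[s^2 - |z_{\alpha/2}|\frac{\sqrt{2}}{\sqrt{n}}s^2,\ s^2 + |z_{\alpha/2}|\frac{\sqrt{2}}{\sqrt{n}}s^2\right].$$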

The approximate formulas become valid for large values of $n$, and are more convenient for manual calculation since the standard normal quantiles $z_{\alpha/2}$ do not depend on $n$. In particular, the most popular value of $\alpha = 5\%$ results in $|z_{0.025}| = 1.96$.
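The large-sample intervals need only standard normal quantiles, which Python's `statistics.NormalDist().inv_cdf` provides. This sketch implements those approximate formulas; the exact intervals would instead need t and $\chi^2$ quantiles, which are not in the standard library (SciPy's `scipy.stats.t.ppf` and `scipy.stats.chi2.ppf` are one source). The data values are hypothetical.

```python
import math
import statistics
from statistics import NormalDist


def approx_confidence_intervals(xs, alpha=0.05):
    """Large-n intervals: mu_hat +/- |z| s / sqrt(n) and s^2 +/- |z| sqrt(2/n) s^2."""
    n = len(xs)
    mu_hat = statistics.fmean(xs)
    s2 = statistics.variance(xs)             # unbiased s^2 with the n - 1 denominator
    z = NormalDist().inv_cdf(1 - alpha / 2)  # |z_{alpha/2}|, about 1.96 for alpha = 0.05
    half_mu = z * math.sqrt(s2 / n)
    half_var = z * math.sqrt(2.0 / n) * s2
    return (mu_hat - half_mu, mu_hat + half_mu), (s2 - half_var, s2 + half_var)


data = [4.8, 5.1, 5.3, 4.9, 5.0, 5.2, 4.7, 5.4, 5.1, 4.95]  # hypothetical sample
(mu_lo, mu_hi), (v_lo, v_hi) = approx_confidence_intervals(data)
print((mu_lo, mu_hi), (v_lo, v_hi))
```

With only ten observations these intervals are noticeably narrower than the exact t- and $\chi^2$-based ones; the normal approximation is meant for large $n$.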

Normality tests

Normality tests assess the likelihood that the given data set {x1, ..., xn} comes from a normal distribution. Typically the null hypothesis H0 is that the observations are distributed normally with unspecified mean μ and variance σ2, versus the alternative Ha that the distribution is arbitrary. Many tests (over 40) have been devised for this problem. The more prominent of them are outlined below:

Diagnostic plots are more intuitively appealing but subjective at the same time, as they rely on informal human judgement to accept or reject the null hypothesis.

- Q–Q plot, also known as normal probability plot or rankit plot: a plot of the sorted values from the data set against the expected values of the corresponding quantiles from the standard normal distribution. That is, it is a plot of points of the form $(\Phi^{-1}(p_k), x_{(k)})$, where plotting points $p_k$ are equal to $p_k = (k - \alpha)/(n + 1 - 2\alpha)$ and $\alpha$ is an adjustment constant, which can be anything between 0 and 1. If the null hypothesis is true, the plotted points should approximately lie on a straight line.

- P–P plot: similar to the Q–Q plot, but used much less frequently. This method consists of plotting the points $(\Phi(z_{(k)}), p_k)$, where $z_{(k)} = (x_{(k)} - \hat{\mu})/\hat{\sigma}$. For normally distributed data this plot should lie on a 45° line between (0, 0) and (1, 1).
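The Q–Q plotting positions from the list above can be computed with `statistics.NormalDist().inv_cdf`. In this sketch $\alpha = 0.5$ is an arbitrary default. Values of $\alpha$ at or near 1 push $p_1$ to 0 and $p_n$ to 1, where $\Phi^{-1}$ is infinite, so the code requires $0 \le \alpha < 1$.

```python
from statistics import NormalDist


def qq_points(data, alpha=0.5):
    """Return (Phi^{-1}(p_k), x_(k)) pairs with p_k = (k - alpha) / (n + 1 - 2 alpha)."""
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must satisfy 0 <= alpha < 1 so that 0 < p_k < 1")
    xs = sorted(data)
    n = len(xs)
    std = NormalDist()
    return [(std.inv_cdf((k - alpha) / (n + 1 - 2 * alpha)), x) for k, x in enumerate(xs, start=1)]


# Data built from exact normal quantiles with mean 3 and sigma 2 (hypothetical),
# so the points fall on the line x = 3 + 2 q, whose slope is sigma.
sample = [3 + 2 * NormalDist().inv_cdf((k - 0.5) / 5) for k in range(5, 0, -1)]
for q, x in qq_points(sample):
    print(round(q, 4), round(x, 4))
```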

Goodness-of-fit tests:

Moment-based tests:

- D'Agostino's K-squared test

- Jarque–Bera test

- Shapiro–Wilk test: This is based on the fact that the line in the Q–Q plot has the slope of σ. The test compares the least squares estimate of that slope with the value of the sample variance, and rejects the null hypothesis if these two quantities differ significantly.

Tests based on the empirical distribution function:

- Anderson–Darling test

- Lilliefors test (an adaptation of the Kolmogorov–Smirnov test)

Bayesian analysis of the normal distribution

Bayesian analysis of normally distributed data is complicated by the many different possibilities that may be considered:

- Either the mean, or the variance, or neither, may be considered a fixed quantity.

- When the variance is unknown, analysis may be done directly in terms of the variance, or in terms of the precision, the reciprocal of the variance. The reason for expressing the formulas in terms of precision is that the analysis of most cases is simplified.

- Both univariate and multivariate cases need to be considered.

- Either conjugate or improper prior distributions may be placed on the unknown variables.

- An additional set of cases occurs in Bayesian linear regression, where in the basic model the data is assumed to be normally distributed, and normal priors are placed on the regression coefficients. The resulting analysis is similar to the basic cases of independent identically distributed data.

The formulas for the non-linear-regression cases are summarized in the conjugate prior article.

Sum of two quadratics

Scalar form

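The following auxiliary formula is useful for simplifying the posterior update equations, which otherwise become fairly tedious:

$$a(x - y)^2 + b(x - z)^2 = (a + b)\left(x - \frac{ay + bz}{a + b}\right)^2 + \frac{ab}{a + b}(y - z)^2.$$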

This equation rewrites the sum of two quadratics in x by expanding the squares, grouping the terms in x, and completing the square. Note the following about the complex constant factors attached to some of the terms:

- The factor $\frac{ay + bz}{a + b}$ has the form of a weighted average of $y$ and $z$.

- $\frac{ab}{a + b} = \frac{1}{\frac{1}{a} + \frac{1}{b}} = (a^{-1} + b^{-1})^{-1}$. This shows that this factor can be thought of as resulting from a situation where the reciprocals of quantities $a$ and $b$ add directly, so to combine $a$ and $b$ themselves, it's necessary to reciprocate, add, and reciprocate the result again to get back into the original units. This is exactly the sort of operation performed by the harmonic mean, so it is not surprising that $\frac{ab}{a + b}$ is one-half the harmonic mean of $a$ and $b$.
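Completing-the-square identities like this are easy to mistype, so a quick numerical check helps. This sketch evaluates both sides at a few arbitrary points and confirms the harmonic-mean remark:

```python
def lhs(x, y, z, a, b):
    """a (x - y)^2 + b (x - z)^2."""
    return a * (x - y) ** 2 + b * (x - z) ** 2


def rhs(x, y, z, a, b):
    """(a + b)(x - m)^2 + ab/(a + b) (y - z)^2, with m the weighted average of y and z."""
    m = (a * y + b * z) / (a + b)
    return (a + b) * (x - m) ** 2 + (a * b) / (a + b) * (y - z) ** 2


y, z, a, b = 1.3, -0.4, 2.0, 0.5
for x in (-2.0, 0.0, 0.7, 3.1):
    print(x, lhs(x, y, z, a, b), rhs(x, y, z, a, b))

harmonic_mean = 2 / (1 / a + 1 / b)
print(a * b / (a + b), harmonic_mean / 2)  # ab/(a + b) is half the harmonic mean
```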

Vector form

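A similar formula can be written for the sum of two vector quadratics: if $\mathbf{x}$, $\mathbf{y}$, $\mathbf{z}$ are vectors of length $k$, and $\mathbf{A}$ and $\mathbf{B}$ are symmetric, invertible matrices of size $k \times k$, then

$$(\mathbf{y} - \mathbf{x})'\mathbf{A}(\mathbf{y} - \mathbf{x}) + (\mathbf{x} - \mathbf{z})'\mathbf{B}(\mathbf{x} - \mathbf{z}) = (\mathbf{x} - \mathbf{c})'(\mathbf{A} + \mathbf{B})(\mathbf{x} - \mathbf{c}) + (\mathbf{y} - \mathbf{z})'(\mathbf{A}^{-1} + \mathbf{B}^{-1})^{-1}(\mathbf{y} - \mathbf{z}),$$

where

$$\mathbf{c} = (\mathbf{A} + \mathbf{B})^{-1}(\mathbf{A}\mathbf{y} + \mathbf{B}\mathbf{z}).$$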

Note that the form $\mathbf{x}'\mathbf{A}\mathbf{x}$ is called a quadratic form and is a scalar:

$$\mathbf{x}'\mathbf{A}\mathbf{x} = \sum_{i,j} a_{ij} x_i x_j.$$

In other words, it sums up all possible combinations of products of pairs of elements from $\mathbf{x}$, with a separate coefficient for each. In addition, since $x_i x_j = x_j x_i$, only the sum $a_{ij} + a_{ji}$ matters for any off-diagonal elements of $\mathbf{A}$, and there is no loss of generality in assuming that $\mathbf{A}$ is symmetric. Furthermore, if $\mathbf{A}$ is symmetric, then the form $\mathbf{x}'\mathbf{A}\mathbf{y} = \mathbf{y}'\mathbf{A}\mathbf{x}$.

Sum of differences from the mean

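Another useful formula is as follows:

$$\sum_{i=1}^{n}(x_i - \mu)^2 = \sum_{i=1}^{n}(x_i - \bar{x})^2 + n(\bar{x} - \mu)^2,$$

where $\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$.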

With known variance

For a set of i.i.d. normally distributed data points $\mathbf{X}$ of size $n$ where each individual point $x$ follows $x \sim \mathcal{N}(\mu, \sigma^2)$ with known variance $\sigma^2$, the conjugate prior distribution is also normally distributed.

This can be shown more easily by rewriting the variance as the precision, i.e. using $\tau = 1/\sigma^2$. Then if $x \sim \mathcal{N}(\mu, 1/\tau)$ and $\mu \sim \mathcal{N}(\mu_0, 1/\tau_0)$, we proceed as follows.

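First, the likelihood function is (using the formula above for the sum of differences from the mean):

$$p(\mathbf{X} \mid \mu, \tau) = \prod_{i=1}^{n}\sqrt{\frac{\tau}{2\pi}}\exp\left(-\frac{1}{2}\tau(x_i - \mu)^2\right) = \left(\frac{\tau}{2\pi}\right)^{n/2}\exp\left[-\frac{1}{2}\tau\left(\sum_{i=1}^{n}(x_i - \bar{x})^2 + n(\bar{x} - \mu)^2\right)\right].$$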

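Then, we proceed as follows:

$$\begin{aligned} p(\mu \mid \mathbf{X}) &\propto p(\mathbf{X} \mid \mu)\, p(\mu) \\ &\propto \exp\left[-\frac{1}{2}\left(n\tau(\bar{x} - \mu)^2 + \tau_0(\mu - \mu_0)^2\right)\right] \\ &= \exp\left[-\frac{1}{2}(n\tau + \tau_0)\left(\mu - \frac{n\tau\bar{x} + \tau_0\mu_0}{n\tau + \tau_0}\right)^2 - \frac{1}{2}\frac{n\tau\tau_0}{n\tau + \tau_0}(\bar{x} - \mu_0)^2\right] \\ &\propto \exp\left[-\frac{1}{2}(n\tau + \tau_0)\left(\mu - \frac{n\tau\bar{x} + \tau_0\mu_0}{n\tau + \tau_0}\right)^2\right] \end{aligned}$$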

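In the above derivation, we used the formula above for the sum of two quadratics and eliminated all constant factors not involving $\mu$. The result is the kernel of a normal distribution, with mean $\frac{n\tau\bar{x} + \tau_0\mu_0}{n\tau + \tau_0}$ and precision $n\tau + \tau_0$, i.e.

$$p(\mu \mid \mathbf{X}) \sim \mathcal{N}\left(\frac{n\tau\bar{x} + \tau_0\mu_0}{n\tau + \tau_0},\ \frac{1}{n\tau + \tau_0}\right).$$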

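This can be written as a set of Bayesian update equations for the posterior parameters in terms of the prior parameters:

$$\tau_0' = \tau_0 + n\tau, \qquad \mu_0' = \frac{n\tau\bar{x} + \tau_0\mu_0}{n\tau + \tau_0}, \qquad \bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i.$$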

That is, to combine $n$ data points with total precision of $n\tau$ (or equivalently, total variance of $\sigma^2/n$) and mean of values $\bar{x}$, derive a new total precision simply by adding the total precision of the data to the prior total precision, and form a new mean through a precision-weighted average, i.e. a weighted average of the data mean and the prior mean, each weighted by the associated total precision. This makes logical sense if the precision is thought of as indicating the certainty of the observations: in the distribution of the posterior mean, each of the input components is weighted by its certainty, and the certainty of this distribution is the sum of the individual certainties. (For the intuition of this, compare the expression "the whole is (or is not) greater than the sum of its parts". In addition, consider that the knowledge of the posterior comes from a combination of the knowledge of the prior and likelihood, so it makes sense that we are more certain of it than of either of its components.)

The above formula reveals why it is more convenient to do Bayesian analysis of conjugate priors for the normal distribution in terms of the precision. The posterior precision is simply the sum of the prior and likelihood precisions, and the posterior mean is computed through a precision-weighted average, as described above. The same formulas can be written in terms of variance by reciprocating all the precisions, yielding the less elegant formulas

$$\sigma_0^{2\prime} = \frac{1}{\frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}}, \qquad \mu_0' = \frac{\frac{n\bar{x}}{\sigma^2} + \frac{\mu_0}{\sigma_0^2}}{\frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}}.$$
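The precision form and the variance form of the update should give identical answers. This sketch implements both (the function names are illustrative) and checks that a very vague prior leaves essentially the data mean and a variance of $\sigma^2/n$:

```python
def posterior_mean_precision_form(xs, sigma2, mu0, sigma0_2):
    """Precision form: tau0' = tau0 + n tau, mu0' = (n tau xbar + tau0 mu0) / tau0'.
    Returns the posterior mean and posterior variance of mu."""
    n = len(xs)
    tau, tau0 = 1.0 / sigma2, 1.0 / sigma0_2
    xbar = sum(xs) / n
    tau_post = tau0 + n * tau
    mu_post = (n * tau * xbar + tau0 * mu0) / tau_post
    return mu_post, 1.0 / tau_post


def posterior_mean_variance_form(xs, sigma2, mu0, sigma0_2):
    """The same update written with variances instead of precisions."""
    n = len(xs)
    xbar = sum(xs) / n
    denom = n / sigma2 + 1.0 / sigma0_2
    return (n * xbar / sigma2 + mu0 / sigma0_2) / denom, 1.0 / denom


print(posterior_mean_precision_form([1.0, 2.0, 3.0], 1.0, 0.0, 1.0))  # (1.5, 0.25)
print(posterior_mean_precision_form([1.0, 2.0, 3.0], 1.0, 100.0, 1e12))  # vague prior: about (2.0, 1/3)
```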

With known mean

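For a set of i.i.d. normally distributed data points $\mathbf{X}$ of size $n$ where each individual point $x$ follows $x \sim \mathcal{N}(\mu, \sigma^2)$ with known mean $\mu$, the conjugate prior of the variance has an inverse gamma distribution or a scaled inverse chi-squared distribution. The two are equivalent except for having different parameterizations. Although the inverse gamma is more commonly used, we use the scaled inverse chi-squared for the sake of convenience. The prior for $\sigma^2$ is as follows:

$$p(\sigma^2 \mid \nu_0, \sigma_0^2) = \frac{(\sigma_0^2\frac{\nu_0}{2})^{\nu_0/2}}{\Gamma\left(\frac{\nu_0}{2}\right)}\,\frac{\exp\left[\frac{-\nu_0\sigma_0^2}{2\sigma^2}\right]}{(\sigma^2)^{1 + \frac{\nu_0}{2}}} \propto \frac{\exp\left[\frac{-\nu_0\sigma_0^2}{2\sigma^2}\right]}{(\sigma^2)^{1 + \frac{\nu_0}{2}}}.$$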

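The likelihood function from above, written in terms of the variance, is:

$$p(\mathbf{X} \mid \mu, \sigma^2) = \left(\frac{1}{2\pi\sigma^2}\right)^{n/2}\exp\left[-\frac{1}{2\sigma^2}\sum_{i=1}^{n}(x_i - \mu)^2\right] = \left(\frac{1}{2\pi\sigma^2}\right)^{n/2}\exp\left[-\frac{S}{2\sigma^2}\right],$$

where

$$S = \sum_{i=1}^{n}(x_i - \mu)^2.$$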

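Then:

$$\begin{aligned} p(\sigma^2 \mid \mathbf{X}) &\propto p(\mathbf{X} \mid \sigma^2)\, p(\sigma^2) \\ &\propto \frac{1}{(\sigma^2)^{n/2}}\exp\left[-\frac{S}{2\sigma^2}\right]\frac{1}{(\sigma^2)^{1 + \frac{\nu_0}{2}}}\exp\left[\frac{-\nu_0\sigma_0^2}{2\sigma^2}\right] \\ &= \frac{1}{(\sigma^2)^{1 + \frac{\nu_0 + n}{2}}}\exp\left[-\frac{\nu_0\sigma_0^2 + S}{2\sigma^2}\right] \end{aligned}$$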

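The above is also a scaled inverse chi-squared distribution where

$$\nu_0' = \nu_0 + n, \qquad \nu_0'\sigma_0^{2\prime} = \nu_0\sigma_0^2 + \sum_{i=1}^{n}(x_i - \mu)^2,$$

or equivalently

$$\nu_0' = \nu_0 + n, \qquad \sigma_0^{2\prime} = \frac{\nu_0\sigma_0^2 + \sum_{i=1}^{n}(x_i - \mu)^2}{\nu_0 + n}.$$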

Reparameterizing in terms of an inverse gamma distribution, the result is:

$$\alpha' = \alpha + \frac{n}{2}, \qquad \beta' = \beta + \frac{\sum_{i=1}^{n}(x_i - \mu)^2}{2}.$$
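This sketch implements the known-mean update. It also maps the result to inverse gamma parameters via $\alpha = \nu/2$ and $\beta = \nu\sigma^2/2$, which is the standard correspondence between the two parameterizations:

```python
def posterior_variance_known_mean(xs, mu, nu0, sigma0_2):
    """Scaled inverse chi-squared update for sigma^2 when the mean mu is known.

    Returns (nu', sigma0^2', alpha', beta'), where the last two describe the
    same posterior as an inverse gamma with alpha = nu / 2, beta = nu sigma^2 / 2.
    """
    n = len(xs)
    ss = sum((x - mu) ** 2 for x in xs)
    nu_post = nu0 + n
    sigma2_post = (nu0 * sigma0_2 + ss) / nu_post
    alpha_post = nu_post / 2.0
    beta_post = nu_post * sigma2_post / 2.0
    return nu_post, sigma2_post, alpha_post, beta_post


print(posterior_variance_known_mean([1.0, 3.0], mu=2.0, nu0=2.0, sigma0_2=1.0))  # (4.0, 1.0, 2.0, 2.0)
```

The inverse gamma values agree with $\alpha' = \alpha + n/2$ and $\beta' = \beta + \sum(x_i - \mu)^2/2$ when the prior is $\alpha = \nu_0/2$ and $\beta = \nu_0\sigma_0^2/2$.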

With unknown mean and unknown variance

For a set of i.i.d. normally distributed data points $\mathbf{X}$ of size $n$ where each individual point $x$ follows $x \sim \mathcal{N}(\mu, \sigma^2)$ with unknown mean $\mu$ and unknown variance $\sigma^2$, a combined (multivariate) conjugate prior is placed over the mean and variance, consisting of a normal-inverse-gamma distribution. Logically, this originates as follows:

- From the analysis of the case with unknown mean but known variance, we see that the update equations involve sufficient statistics computed from the data consisting of the mean of the data points and the total variance of the data points, computed in turn from the known variance divided by the number of data points.

- From the analysis of the case with unknown variance but known mean, we see that the update equations involve sufficient statistics over the data consisting of the number of data points and sum of squared deviations.

- Keep in mind that the posterior update values serve as the prior distribution when further data is handled. Thus, we should logically think of our priors in terms of the sufficient statistics just described, with the same semantics kept in mind as much as possible.

- To handle the case where both mean and variance are unknown, we could place independent priors over the mean and variance, with fixed estimates of the average mean, total variance, number of data points used to compute the variance prior, and sum of squared deviations. Note however that in reality, the total variance of the mean depends on the unknown variance, and the sum of squared deviations that goes into the variance prior (appears to) depend on the unknown mean. In practice, the latter dependence is relatively unimportant: Shifting the actual mean shifts the generated points by an equal amount, and on average the squared deviations will remain the same. This is not the case, however, with the total variance of the mean: As the unknown variance increases, the total variance of the mean will increase proportionately, and we would like to capture this dependence.

- This suggests that we create a conditional prior of the mean on the unknown variance, with a hyperparameter specifying the mean of the pseudo-observations associated with the prior, and another parameter specifying the number of pseudo-observations. This number serves as a scaling parameter on the variance, making it possible to control the overall variance of the mean relative to the actual variance parameter. The prior for the variance also has two hyperparameters, one specifying the sum of squared deviations of the pseudo-observations associated with the prior, and another specifying once again the number of pseudo-observations. Note that each of the priors has a hyperparameter specifying the number of pseudo-observations, and in each case this controls the relative variance of that prior. These are given as two separate hyperparameters so that the variance (aka the confidence) of the two priors can be controlled separately.

- This leads immediately to the normal-inverse-gamma distribution, which is the product of the two distributions just defined, with conjugate priors used (an inverse gamma distribution over the variance, and a normal distribution over the mean, conditional on the variance) and with the same four parameters just defined.

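The priors are normally defined as follows:

$$p(\mu \mid \sigma^2; \mu_0, n_0) \sim \mathcal{N}\left(\mu_0, \sigma^2 / n_0\right)$$

$$p(\sigma^2; \nu_0, \sigma_0^2) \sim I\chi^2(\nu_0, \sigma_0^2) = IG\left(\nu_0/2,\ \nu_0\sigma_0^2/2\right)$$

The update equations can be derived, and look as follows:

$$n_0' = n_0 + n$$

$$\mu_0' = \frac{n_0\mu_0 + n\bar{x}}{n_0 + n}$$

$$\nu_0' = \nu_0 + n$$

$$\nu_0'{\sigma_0^2}' = \nu_0\sigma_0^2 + \sum_{i=1}^n (x_i - \bar{x})^2 + \frac{n_0 n}{n_0 + n}(\mu_0 - \bar{x})^2$$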

Each of the two pseudo-observation counts is increased by the number of actual observations. The new mean hyperparameter is once again a weighted average, this time weighted by the relative numbers of observations. Finally, the update for the sum-of-squares hyperparameter $\nu_0'{\sigma_0^2}'$ is similar to the case with known mean, but in this case the sum of squared deviations is taken with respect to the observed data mean rather than the true mean, and as a result a new "interaction term" needs to be added to take care of the additional error source stemming from the deviation between the prior mean and the data mean.
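The update equations translate directly into code. The following is a minimal Python sketch, not a reference implementation: the container `NormalInvGammaHyper` and the function `nig_update` are hypothetical names, with fields mirroring the hyperparameters μ₀, n₀, ν₀ and σ₀² (so that ν₀σ₀² plays the role of the pseudo sum of squared deviations).

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class NormalInvGammaHyper:
    """Hyperparameters of a normal-inverse-gamma prior (hypothetical container)."""
    mu0: float        # mean of the pseudo-observations behind the prior on mu
    n0: float         # number of pseudo-observations behind the prior on mu
    nu0: float        # number of pseudo-observations behind the prior on sigma^2
    sigma0_sq: float  # prior variance estimate; nu0 * sigma0_sq is the pseudo sum of squares


def nig_update(prior: NormalInvGammaHyper, data: Sequence[float]) -> NormalInvGammaHyper:
    """Conjugate update of (mu0, n0, nu0, sigma0^2) given observations x_1..x_n."""
    n = len(data)
    if n == 0:
        return prior  # no data: the posterior equals the prior
    xbar = sum(data) / n
    ss = sum((x - xbar) ** 2 for x in data)  # squared deviations about the data mean
    n_post = prior.n0 + n                     # both pseudo-observation counts grow by n
    nu_post = prior.nu0 + n
    mu_post = (prior.n0 * prior.mu0 + n * xbar) / n_post  # weighted average of the means
    # Interaction term: extra spread from the gap between the prior mean and the data mean.
    interaction = prior.n0 * n / n_post * (prior.mu0 - xbar) ** 2
    sigma_post_sq = (prior.nu0 * prior.sigma0_sq + ss + interaction) / nu_post
    return NormalInvGammaHyper(mu_post, n_post, nu_post, sigma_post_sq)


prior = NormalInvGammaHyper(mu0=0.0, n0=1.0, nu0=1.0, sigma0_sq=1.0)
posterior = nig_update(prior, [1.0, 2.0, 3.0])
print(posterior)  # NormalInvGammaHyper(mu0=1.5, n0=4.0, nu0=4.0, sigma0_sq=1.5)
```

Because the posterior hyperparameters serve as the prior for the next batch, updating with `[1.0, 2.0]` and then with `[3.0]` gives the same hyperparameters as a single update on `[1.0, 2.0, 3.0]`.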

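The prior distributions are

$$p(\mu \mid \sigma^2; \mu_0, n_0) \sim \mathcal{N}\left(\mu_0, \frac{\sigma^2}{n_0}\right) = \frac{1}{\sqrt{2\pi\frac{\sigma^2}{n_0}}}\exp\left(-\frac{n_0}{2\sigma^2}(\mu - \mu_0)^2\right) \propto (\sigma^2)^{-1/2}\exp\left(-\frac{n_0}{2\sigma^2}(\mu - \mu_0)^2\right)$$

$$p(\sigma^2; \nu_0, \sigma_0^2) \sim I\chi^2(\nu_0, \sigma_0^2) = IG\left(\frac{\nu_0}{2}, \frac{\nu_0\sigma_0^2}{2}\right) = \frac{(\nu_0\sigma_0^2/2)^{\nu_0/2}}{\Gamma(\nu_0/2)}\,\frac{\exp\left[-\frac{\nu_0\sigma_0^2}{2\sigma^2}\right]}{(\sigma^2)^{1 + \nu_0/2}} \propto (\sigma^2)^{-(1 + \nu_0/2)}\exp\left[-\frac{\nu_0\sigma_0^2}{2\sigma^2}\right]$$

Therefore, the joint prior is

$$p(\mu, \sigma^2; \mu_0, n_0, \nu_0, \sigma_0^2) = p(\mu \mid \sigma^2; \mu_0, n_0)\, p(\sigma^2; \nu_0, \sigma_0^2) \propto (\sigma^2)^{-(\nu_0 + 3)/2}\exp\left[-\frac{1}{2\sigma^2}\left(\nu_0\sigma_0^2 + n_0(\mu - \mu_0)^2\right)\right]$$

The likelihood function from the section above with known variance is:

$$p(\mathbf{X} \mid \mu, \tau) = \left(\frac{\tau}{2\pi}\right)^{n/2}\exp\left[-\frac{\tau}{2}\left(\sum_{i=1}^n (x_i - \bar{x})^2 + n(\bar{x} - \mu)^2\right)\right]$$

Writing it in terms of variance rather than precision (τ = 1/σ²), we get:

$$p(\mathbf{X} \mid \mu, \sigma^2) = \left(\frac{1}{2\pi\sigma^2}\right)^{n/2}\exp\left[-\frac{1}{2\sigma^2}\left(S + n(\bar{x} - \mu)^2\right)\right] \propto (\sigma^2)^{-n/2}\exp\left[-\frac{1}{2\sigma^2}\left(S + n(\bar{x} - \mu)^2\right)\right]$$

where $S = \sum_{i=1}^n (x_i - \bar{x})^2$.

Therefore, the posterior is (dropping the hyperparameters as conditioning factors):

$$\begin{aligned}
p(\mu, \sigma^2 \mid \mathbf{X}) &\propto p(\mu, \sigma^2)\, p(\mathbf{X} \mid \mu, \sigma^2) \\
&\propto (\sigma^2)^{-(\nu_0 + n + 3)/2}\exp\left[-\frac{1}{2\sigma^2}\left(\nu_0\sigma_0^2 + S + n_0(\mu - \mu_0)^2 + n(\bar{x} - \mu)^2\right)\right] \\
&= (\sigma^2)^{-(\nu_0 + n + 3)/2}\exp\left[-\frac{1}{2\sigma^2}\left(\nu_0\sigma_0^2 + S + \frac{n_0 n}{n_0 + n}(\mu_0 - \bar{x})^2 + (n_0 + n)\left(\mu - \frac{n_0\mu_0 + n\bar{x}}{n_0 + n}\right)^2\right)\right] \\
&\propto (\sigma^2)^{-1/2}\exp\left[-\frac{n_0 + n}{2\sigma^2}\left(\mu - \frac{n_0\mu_0 + n\bar{x}}{n_0 + n}\right)^2\right] \times (\sigma^2)^{-(\nu_0/2 + n/2 + 1)}\exp\left[-\frac{1}{2\sigma^2}\left(\nu_0\sigma_0^2 + S + \frac{n_0 n}{n_0 + n}(\mu_0 - \bar{x})^2\right)\right] \\
&= \mathcal{N}_{\mu \mid \sigma^2}\left(\frac{n_0\mu_0 + n\bar{x}}{n_0 + n}, \frac{\sigma^2}{n_0 + n}\right) \cdot IG_{\sigma^2}\left(\frac{1}{2}(\nu_0 + n), \frac{1}{2}\left(\nu_0\sigma_0^2 + S + \frac{n_0 n}{n_0 + n}(\mu_0 - \bar{x})^2\right)\right)
\end{aligned}$$

In other words, the posterior distribution has the form of a product of a normal distribution over $p(\mu \mid \sigma^2)$ times an inverse gamma distribution over $p(\sigma^2)$, with parameters that are the same as the update equations above.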

Occurrence and applications

The occurrence of normal distribution in practical problems can be loosely classified into four categories:

- Exactly normal distributions;

- Approximately normal laws, for example when such approximation is justified by the central limit theorem;

- Distributions modeled as normal – the normal distribution being the distribution with maximum entropy for a given mean and variance; and

- Regression problems – the normal distribution being found after systematic effects have been modeled sufficiently well.

Exact normality

Certain quantities in physics are distributed normally, as was first demonstrated by James Clerk Maxwell. Examples of such quantities are:

- Probability density function of a ground state in a quantum harmonic oscillator.

- The position of a particle that experiences diffusion. If initially the particle is located at a specific point (that is, its probability distribution is the Dirac delta function), then after time t its location is described by a normal distribution with variance t, which satisfies the diffusion equation $\frac{\partial}{\partial t} f(x,t) = \frac{1}{2}\frac{\partial^2}{\partial x^2} f(x,t)$. If the initial location is given by a certain density function $g(x)$, then the density at time t is the convolution of g and the normal PDF.
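The claim that the N(0, t) density solves the diffusion equation can be checked numerically. This sketch evaluates the normal density with variance t and compares central finite differences of ∂f/∂t and ½∂²f/∂x², which should nearly cancel; it also confirms with a midpoint-rule integral that the variance at time t equals t. The helper names, step size and integration range are illustrative choices, not part of the source.

```python
import math


def heat_kernel(x: float, t: float) -> float:
    """N(0, t) density: where a particle started at 0 is found after time t."""
    return math.exp(-x * x / (2.0 * t)) / math.sqrt(2.0 * math.pi * t)


def diffusion_residual(x: float, t: float, h: float = 1e-4) -> float:
    """Central-difference estimate of df/dt - (1/2) d^2f/dx^2 (near 0 if f solves it)."""
    df_dt = (heat_kernel(x, t + h) - heat_kernel(x, t - h)) / (2.0 * h)
    d2f_dx2 = (heat_kernel(x + h, t) - 2.0 * heat_kernel(x, t) + heat_kernel(x - h, t)) / (h * h)
    return df_dt - 0.5 * d2f_dx2


def variance_at(t: float, half_width: float = 12.0, steps: int = 24000) -> float:
    """Midpoint-rule estimate of the integral of x^2 f(x, t) dx; should equal t."""
    dx = 2.0 * half_width / steps
    xs = (-half_width + (i + 0.5) * dx for i in range(steps))
    return sum(x * x * heat_kernel(x, t) for x in xs) * dx


worst = max(abs(diffusion_residual(x / 4, t)) for x in range(-8, 9) for t in (0.5, 1.0, 2.0))
print(worst, variance_at(2.0))
```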

Approximate normality

Approximately normal distributions occur in many situations, as explained by the central limit theorem. When the outcome is produced by many small effects acting additively and independently, its distribution will be close to normal. The normal approximation will not be valid if the effects act multiplicatively (instead of additively), or if there is a single external influence that has a considerably larger magnitude than the rest of the effects.

- In counting problems, where the central limit theorem includes a discrete-to-continuum approximation and where infinitely divisible and decomposable distributions are involved, such as

- Binomial random variables, associated with binary response variables;

- Poisson random variables, associated with rare events;

- Thermal radiation has a Bose–Einstein distribution on very short time scales, and a normal distribution on longer timescales due to the central limit theorem.
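The quality of the normal approximation for the two counting distributions listed above can be inspected directly. The sketch below compares exact binomial and Poisson CDFs with a normal CDF having the same mean and variance (np and np(1 − p) for the binomial, λ and λ for the Poisson). The half-unit continuity correction is a common convention added here as an assumption, and the parameter values are arbitrary; for these moderately large parameters the two columns should agree to within roughly 0.01 to 0.02.

```python
import math
from statistics import NormalDist


def binomial_cdf(k: int, n: int, p: float) -> float:
    """Exact P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k + 1))


def poisson_cdf(k: int, lam: float) -> float:
    """Exact P(X <= k) for X ~ Poisson(lam), summing pmf terms recursively."""
    term, total = math.exp(-lam), 0.0
    for i in range(k + 1):
        total += term
        term *= lam / (i + 1)  # P(X = i + 1) from P(X = i)
    return total


def normal_approx_cdf(k: int, mean: float, var: float) -> float:
    """Normal approximation to P(X <= k), with a 0.5 continuity correction."""
    return NormalDist(mean, math.sqrt(var)).cdf(k + 0.5)


n, p, lam = 100, 0.3, 50.0
for k in (25, 30, 35):
    exact, approx = binomial_cdf(k, n, p), normal_approx_cdf(k, n * p, n * p * (1 - p))
    print("binomial", k, round(exact, 4), round(approx, 4))
for k in (40, 50, 60):
    print("poisson", k, round(poisson_cdf(k, lam), 4), round(normal_approx_cdf(k, lam, lam), 4))
```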

Assumed normality

I can only recognize the occurrence of the normal curve – the Laplacian curve of errors – as a very abnormal phenomenon. It is roughly approximated to in certain distributions; for this reason, and on account for its beautiful simplicity, we may, perhaps, use it as a first approximation, particularly in theoretical investigations.

There are statistical methods to empirically test that assumption; see the above Normality tests section.

- In biology, the logarithm of various variables tends to have a normal distribution, that is, they tend to have a log-normal distribution (after separation on male/female subpopulations), with examples including:

- Measures of size of living tissue (length, height, skin area, weight);[53]

- The length of inert appendages (hair, claws, nails, teeth) of biological specimens, in the direction of growth; presumably the thickness of tree bark also falls under this category;

- Certain physiological measurements, such as blood pressure of adult humans.

- In finance, in particular the Black–Scholes model, changes in the logarithm of exchange rates, price indices, and stock market indices are assumed normal (these variables behave like compound interest, not like simple interest, and so are multiplicative). Some mathematicians such as Benoit Mandelbrot have argued that log-Lévy distributions, which possess heavy tails, would be a more appropriate model, in particular for the analysis of stock market crashes. The use of the assumption of normal distribution occurring in financial models has also been criticized by Nassim Nicholas Taleb in his works.

- Measurement errors in physical experiments are often modeled by a normal distribution. This use of a normal distribution does not imply that one is assuming the measurement errors are normally distributed; rather, using the normal distribution produces the most conservative predictions possible given only knowledge about the mean and variance of the errors.[54]

- In standardized testing, results can be made to have a normal distribution by either selecting the number and difficulty of questions (as in the IQ test) or transforming the raw test scores into "output" scores by fitting them to the normal distribution. For example, the SAT's traditional range of 200–800 is based on a normal distribution with a mean of 500 and a standard deviation of 100.

- Many scores are derived from the normal distribution, including percentile ranks ("percentiles" or "quantiles"), normal curve equivalents, stanines, z-scores, and T-scores. Additionally, some behavioral statistical procedures assume that scores are normally distributed; for example, t-tests and ANOVAs. Bell curve grading assigns relative grades based on a normal distribution of scores.

- In hydrology the distribution of long duration river discharge or rainfall, e.g. monthly and yearly totals, is often thought to be practically normal according to the central limit theorem.[55] The blue picture, made with CumFreq, illustrates an example of fitting the normal distribution to ranked October rainfalls showing the 90% confidence belt based on the binomial distribution. The rainfall data are represented by plotting positions as part of the cumulative frequency analysis.
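Several of the score scales mentioned above are linear transformations of a z-score, and percentile ranks follow from Φ. The following Python sketch assumes the population mean and standard deviation are known. The T-score (mean 50, sd 10), normal curve equivalent (mean 50, sd 21.06) and stanine (mean 5, sd 2, clipped to 1 through 9) conventions are the usual ones, while the function name and the simple rounding rule used for stanines are illustrative assumptions; the `SAT-style` entry uses the mean of 500 and standard deviation of 100 from the SAT example.

```python
from statistics import NormalDist


def standard_scores(raw: float, mean: float, sd: float) -> dict:
    """Express a raw score on several normal-based scales (mean and sd assumed known)."""
    z = (raw - mean) / sd
    return {
        "z": z,
        "T": 50.0 + 10.0 * z,                          # T-score: mean 50, sd 10
        "NCE": 50.0 + 21.06 * z,                       # normal curve equivalent: mean 50, sd 21.06
        "SAT-style": 500.0 + 100.0 * z,                # mean 500, sd 100 as in the SAT example
        "stanine": min(9, max(1, round(5 + 2 * z))),   # approx. stanine: mean 5, sd 2, clipped
        "percentile": 100.0 * NormalDist().cdf(z),     # percentile rank if scores are normal
    }


print(standard_scores(130.0, 100.0, 15.0))
```

For a raw score of 130 on a scale with mean 100 and standard deviation 15, z = 2, the T-score is 70, the stanine is 9, and the percentile rank is about 97.7.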

Methodological problems and peer review

John Ioannidis argues that using normally distributed standard deviations as standards for validating research findings leaves falsifiable predictions about phenomena that are not normally distributed untested. This includes, for example, phenomena that only appear when all necessary conditions are present, where one condition cannot substitute for another in an addition-like way, and phenomena that are not randomly distributed. Ioannidis argues that standard-deviation-centered validation gives a false appearance of validity to hypotheses and theories where some but not all falsifiable predictions are normally distributed, since the falsifiable predictions that there is evidence against may lie, and in some cases do lie, in the non-normally distributed part of the range of falsifiable predictions. It also baselessly dismisses hypotheses for which none of the falsifiable predictions are normally distributed, as if they were unfalsifiable, when in fact they do make falsifiable predictions. Ioannidis argues that many cases of mutually exclusive theories being accepted as "validated" by research journals are caused by the journals' failure to take in empirical falsifications of non-normally distributed predictions, and not because mutually exclusive theories are true, which they cannot be, although two mutually exclusive theories can both be wrong and a third one correct.[56]

Computational methods

Generating values from normal distribution

In computer simulations, especially in applications of the Monte-Carlo method, it is often desirable to generate values that are normally distributed. The algorithms listed below all generate the standard normal deviates, since a N(μ, σ²) variate can be generated as X = μ + σZ, where Z is standard normal. All these algorithms rely on the availability of a random number generator U capable of producing uniform random variates.

- The most straightforward method is based on the probability integral transform property: if U is distributed uniformly on (0,1), then Φ⁻¹(U) will have the standard normal distribution. The drawback of this method is that it relies on calculation of the probit function Φ⁻¹, which cannot be done analytically. Some approximate methods are described in Hart (1968) and in the erf article. Wichura gives a fast algorithm for computing this function to 16 decimal places,[57] which is used by R to compute random variates of the normal distribution.

- An easy-to-program approximate approach that relies on the central limit theorem is as follows: generate 12 uniform U(0,1) deviates, add them all up, and subtract 6; the resulting random variable will have approximately standard normal distribution. In truth, the distribution will be Irwin–Hall, which is a 12-section eleventh-order polynomial approximation to the normal distribution. This random deviate will have a limited range of (−6, 6).[58] Note that in a true normal distribution, only about 2 × 10⁻⁹ of all samples (roughly 0.0000002%) will fall outside ±6σ.

- The Box–Muller method uses two independent random numbers U and V distributed uniformly on (0,1). Then the two random variables $X = \sqrt{-2\ln U}\,\cos(2\pi V)$ and $Y = \sqrt{-2\ln U}\,\sin(2\pi V)$ will both have the standard normal distribution, and will be independent. This formulation arises because for a bivariate normal random vector (X, Y) the squared norm X² + Y² will have the chi-squared distribution with two degrees of freedom, which is an easily generated exponential random variable corresponding to the quantity −2 ln(U) in these equations; and the angle is distributed uniformly around the circle, chosen by the random variable V.

- The Marsaglia polar method is a modification of the Box–Muller method which does not require computation of the sine and cosine functions. In this method, U and V are drawn from the uniform (−1,1) distribution, and then S = U² + V² is computed. If S is greater than or equal to 1, then the method starts over; otherwise the two quantities $X = U\sqrt{\frac{-2\ln S}{S}}$ and $Y = V\sqrt{\frac{-2\ln S}{S}}$ are returned. Again, X and Y are independent, standard normal random variables.

- The ratio method[59] is a rejection method. The algorithm proceeds as follows:

  1. Generate two independent uniform deviates U and V;
  2. Compute $X = \sqrt{8/e}\,(V - 0.5)/U$;
  3. Optional: if $X^2 \le 5 - 4e^{1/4}U$ then accept X and terminate the algorithm;
  4. Optional: if $X^2 \ge 4e^{-1.35}/U + 1.4$ then reject X and start over from step 1;
  5. If $X^2 \le -4\ln U$ then accept X, otherwise start over the algorithm.

  The two optional steps allow the evaluation of the logarithm in the last step to be avoided in most cases. These steps can be greatly improved[60] so that the logarithm is rarely evaluated.

- The ziggurat algorithm[61] is faster than the Box–Muller transform and still exact. In about 97% of all cases it uses only two random numbers, one random integer and one random uniform, one multiplication and an if-test. Only in 3% of the cases, where the combination of those two falls outside the "core of the ziggurat" (a kind of rejection sampling using logarithms), do exponentials and more uniform random numbers have to be employed.

- Integer arithmetic can be used to sample from the standard normal distribution.[62] This method is exact in the sense that it satisfies the conditions of ideal approximation;[63] i.e., it is equivalent to sampling a real number from the standard normal distribution and rounding this to the nearest representable floating point number.

- There is also some investigation[64] into the connection between the fast Hadamard transform and the normal distribution, since the transform employs just addition and subtraction and by the central limit theorem random numbers from almost any distribution will be transformed into the normal distribution. In this regard a series of Hadamard transforms can be combined with random permutations to turn arbitrary data sets into normally distributed data.
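Five of the generators described above are short enough to sketch side by side. The following Python code is illustrative, not a tuned implementation: it draws uniforms from `random.Random` and uses `statistics.NormalDist.inv_cdf` as the probit function for the inverse-transform method (CPython's implementation of `inv_cdf` cites Wichura's AS241). Excluding a uniform value of exactly 0 where a logarithm or division needs it is an added safeguard. The ziggurat, exact integer-arithmetic and Hadamard approaches are not sketched here.

```python
import math
import random
from statistics import NormalDist

_STANDARD = NormalDist()  # used only for its inverse CDF (the probit function)


def inverse_transform(rng: random.Random) -> float:
    """Phi^{-1}(U) with U ~ Uniform(0, 1)."""
    u = rng.random()
    while u == 0.0:  # inv_cdf requires 0 < u < 1, and random() may return 0.0
        u = rng.random()
    return _STANDARD.inv_cdf(u)


def irwin_hall_12(rng: random.Random) -> float:
    """Approximate: sum of 12 uniforms minus 6; mean 0, variance 1, range (-6, 6)."""
    return sum(rng.random() for _ in range(12)) - 6.0


def box_muller(rng: random.Random) -> tuple[float, float]:
    """Exact: two independent standard normals from two uniforms."""
    u = 1.0 - rng.random()  # in (0, 1], so log(u) is finite
    v = rng.random()
    r = math.sqrt(-2.0 * math.log(u))
    return r * math.cos(2.0 * math.pi * v), r * math.sin(2.0 * math.pi * v)


def marsaglia_polar(rng: random.Random) -> tuple[float, float]:
    """Exact: Box-Muller variant that rejects points outside the unit disc, no sin/cos."""
    while True:
        u = 2.0 * rng.random() - 1.0
        v = 2.0 * rng.random() - 1.0
        s = u * u + v * v
        if 0.0 < s < 1.0:  # s == 0 is excluded to avoid log(0)
            factor = math.sqrt(-2.0 * math.log(s) / s)
            return u * factor, v * factor


def ratio_of_uniforms(rng: random.Random) -> float:
    """Exact: Kinderman-Monahan ratio method with the two optional quick tests."""
    c = math.sqrt(8.0 / math.e)
    while True:
        u = 1.0 - rng.random()  # in (0, 1], avoids division by zero
        v = rng.random()
        x = c * (v - 0.5) / u
        x2 = x * x
        if x2 <= 5.0 - 4.0 * math.exp(0.25) * u:  # quick accept
            return x
        if x2 >= 4.0 * math.exp(-1.35) / u + 1.4:  # quick reject: start over
            continue
        if x2 <= -4.0 * math.log(u):  # the exact acceptance test
            return x


def sample_moments(draws: list[float]) -> tuple[float, float]:
    """Sample mean and unbiased sample variance."""
    n = len(draws)
    mean = sum(draws) / n
    return mean, sum((d - mean) ** 2 for d in draws) / (n - 1)


rng = random.Random(12345)
mu, sigma = 10.0, 2.0
draw = mu + sigma * box_muller(rng)[0]  # X = mu + sigma * Z gives an N(mu, sigma^2) variate
print(sample_moments([ratio_of_uniforms(rng) for _ in range(10000)]))
```

Each function returns standard normal deviates; the last lines show the X = μ + σZ rescaling and a quick moment check, whose sample mean and variance should be near 0 and 1.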

Numerical approximations for the normal CDF and normal quantile function

The standard normal CDF is widely used in scientific and statistical computing.

The values Φ(x) may be approximated very accurately by a variety of methods, such as numerical integration, Taylor series, asymptotic series and continued fractions. Different approximations are used depending on the desired level of accuracy.

- Zelen & Severo (1964) give the approximation for Φ(x) for x > 0 with the absolute error |ε(x)| < 7.5·10⁻⁸ (algorithm 26.2.17): $\Phi(x) = 1 - \varphi(x)\left(b_1 t + b_2 t^2 + b_3 t^3 + b_4 t^4 + b_5 t^5\right) + \varepsilon(x)$, with $t = \frac{1}{1 + b_0 x}$, where ϕ(x) is the standard normal PDF, and b0 = 0.2316419, b1 = 0.319381530, b2 = −0.356563782, b3 = 1.781477937, b4 = −1.821255978, b5 = 1.330274429.

- Hart (1968) lists some dozens of approximations – by means of rational functions, with or without exponentials – for the erfc() function. His algorithms vary in the degree of complexity and the resulting precision, with maximum absolute precision of 24 digits. An algorithm by West (2009) combines Hart's algorithm 5666 with a continued fraction approximation in the tail to provide a fast computation algorithm with a 16-digit precision.

- Cody (1969), after noting that the Hart (1968) solution is not suited for erf, gives a solution for both erf and erfc, with a maximal relative error bound, via rational Chebyshev approximation.

- Marsaglia (2004) suggested a simple algorithm[note 1] based on the Taylor series expansion for calculating Φ(x) with arbitrary precision. The drawback of this algorithm is comparatively slow calculation time (for example it takes over 300 iterations to calculate the function with 16 digits of precision when x = 10).

- The GNU Scientific Library calculates values of the standard normal CDF using Hart's algorithms and approximations with Chebyshev polynomials.
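The Zelen & Severo formula and Marsaglia's series are both short enough to sketch. The version below is an illustration in Python: it extends Zelen & Severo to x < 0 through the symmetry Φ(−x) = 1 − Φ(x), sums Marsaglia's series Φ(x) = ½ + φ(x)(x + x³/3 + x⁵/(3·5) + ⋯) term by term, and uses the standard library's `math.erfc` as the reference. The stopping tolerance and term cap for the series are arbitrary choices.

```python
import math


def phi(x: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def cdf_zelen_severo(x: float) -> float:
    """Zelen & Severo (A&S 26.2.17): |error| < 7.5e-8 for x >= 0; symmetry for x < 0."""
    if x < 0.0:
        return 1.0 - cdf_zelen_severo(-x)  # Phi(-x) = 1 - Phi(x)
    b0 = 0.2316419
    b = (0.319381530, -0.356563782, 1.781477937, -1.821255978, 1.330274429)
    t = 1.0 / (1.0 + b0 * x)
    poly = sum(bk * t ** (k + 1) for k, bk in enumerate(b))  # b1 t + ... + b5 t^5
    return 1.0 - phi(x) * poly


def cdf_marsaglia(x: float, rel_tol: float = 1e-17, max_terms: int = 1000) -> float:
    """Marsaglia's Taylor series: Phi(x) = 1/2 + phi(x) (x + x^3/3 + x^5/(3*5) + ...)."""
    term = total = x
    k = 0
    while abs(term) > rel_tol * abs(total) and k < max_terms:
        k += 1
        term *= x * x / (2 * k + 1)  # next term: x^(2k+1) / (1*3*5*...*(2k+1))
        total += term
    return 0.5 + phi(x) * total


def cdf_reference(x: float) -> float:
    """Reference value via the library complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


for x in (-3.0, -1.0, 0.0, 0.5, 2.0):
    print(x, cdf_zelen_severo(x), cdf_marsaglia(x), cdf_reference(x))
```

The series terms all share the sign of x, so the summation involves no cancellation; the cost is the growing number of terms for large |x| noted above.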

Shore (1982) introduced simple approximations that may be incorporated in stochastic optimization models of engineering and operations research, like reliability engineering and inventory analysis. Denoting p = Φ(z), the simplest approximation for the quantile function is:

$$z = \Phi^{-1}(p) = 5.5556\left[1 - \left(\frac{1-p}{p}\right)^{0.1186}\right], \qquad p \ge 1/2$$

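This approximation delivers for z a maximum absolute error of 0.026 (for 0.5 ≤ p ≤ 0.9999, corresponding to 0 ≤ z ≤ 3.719). For p < 1/2 replace p by 1 − p and change sign. Another approximation, somewhat less accurate, is the single-parameter approximation:

$$z = -0.4115\left\{\frac{1-p}{p} + \log\left[\frac{1-p}{p}\right] - 1\right\}, \qquad p \ge 1/2$$

The latter had served to derive a simple approximation for the loss integral of the normal distribution, defined by

$$L(z) = \int_z^\infty (u - z)\,\varphi(u)\,du = \int_z^\infty [1 - \Phi(u)]\,du$$

$$L(z) \approx \begin{cases} 0.4115\left(\dfrac{p}{1-p}\right) - z, & p < 1/2, \\ 0.4115\left(\dfrac{1-p}{p}\right), & p \ge 1/2. \end{cases}$$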

This approximation is particularly accurate for the right far-tail (maximum error of 10⁻³ for z ≥ 1.4). Highly accurate approximations for the CDF, based on Response Modeling Methodology (RMM, Shore, 2011, 2012), are shown in Shore (2005).

Further approximations can be found in the article on the error function, under "Approximation with elementary functions". In particular, a small relative error over the whole domain, for the CDF and for the quantile function alike, is achieved via an explicitly invertible formula by Sergei Winitzki in 2008.
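Winitzki's formula approximates erf(x) ≈ sgn(x)·√(1 − exp(−x²(4/π + ax²)/(1 + ax²))). Because the exponent is a ratio of polynomials in x², it can be inverted in closed form by solving a quadratic in x². The sketch below assumes a = 0.147, the constant usually quoted for this formula, and maps erf to the normal CDF and quantile through Φ(z) = ½(1 + erf(z/√2)) and Φ⁻¹(p) = √2·erf⁻¹(2p − 1). The function names are hypothetical and the code is illustrative rather than a tuned implementation.

```python
import math
from statistics import NormalDist

WINITZKI_A = 0.147  # assumed constant; the value usually quoted for this formula


def erf_winitzki(x: float, a: float = WINITZKI_A) -> float:
    """erf(x) ~ sgn(x) * sqrt(1 - exp(-x^2 (4/pi + a x^2) / (1 + a x^2)))."""
    x2 = x * x
    inner = -x2 * (4.0 / math.pi + a * x2) / (1.0 + a * x2)
    return math.copysign(math.sqrt(1.0 - math.exp(inner)), x)


def erfinv_winitzki(y: float, a: float = WINITZKI_A) -> float:
    """Closed-form inverse of the same formula, valid for -1 < y < 1."""
    ln = math.log(1.0 - y * y)
    b = 2.0 / (math.pi * a) + ln / 2.0
    # x^2 is the positive root of the quadratic w^2 + 2 b w + ln / a = 0.
    return math.copysign(math.sqrt(math.sqrt(b * b - ln / a) - b), y)


def normal_cdf_approx(z: float) -> float:
    """Phi(z) = (1 + erf(z / sqrt(2))) / 2, using the approximate erf."""
    return 0.5 * (1.0 + erf_winitzki(z / math.sqrt(2.0)))


def normal_quantile_approx(p: float) -> float:
    """Phi^{-1}(p) = sqrt(2) erfinv(2p - 1), using the approximate inverse."""
    return math.sqrt(2.0) * erfinv_winitzki(2.0 * p - 1.0)


for p in (0.5, 0.9, 0.99, 0.999):
    print(p, normal_quantile_approx(p), NormalDist().inv_cdf(p))
```

Because the inverse is derived from the same formula, `erfinv_winitzki(erf_winitzki(x))` returns x up to rounding, which is the explicit invertibility the text refers to.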

History

Development

Some authors[65][66] attribute the credit for the discovery of the normal distribution to de Moivre, who in 1738[note 2] published in the second edition of his "The Doctrine of Chances" the study of the coefficients in the binomial expansion of (a + b)ⁿ. De Moivre proved that the ratio of the middle term in this expansion to the sum of all the terms is approximately $2/\sqrt{2\pi n}$, and that "If m or ½n be a Quantity infinitely great, then the Logarithm of the Ratio, which a Term distant from the middle by the Interval ℓ, has to the middle Term, is $-\frac{2\ell\ell}{n}$."[67] Although this theorem can be interpreted as the first obscure expression for the normal probability law, Stigler points out that de Moivre himself did not interpret his results as anything more than the approximate rule for the binomial coefficients, and in particular de Moivre lacked the concept of the probability density function.[68]

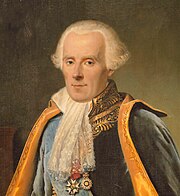

In 1809 Gauss published his monograph "Theoria motus corporum coelestium in sectionibus conicis solem ambientium" where among other things he introduces several important statistical concepts, such as the method of least squares, the method of maximum likelihood, and the normal distribution. Gauss used M, M′, M′′, ... to denote the measurements of some unknown quantity V, and sought the "most probable" estimator of that quantity: the one that maximizes the probability φ(M − V) · φ(M′ − V) · φ(M′′ − V) · ... of obtaining the observed experimental results. In his notation φΔ is the probability density function of the measurement errors of magnitude Δ. Not knowing what the function φ is, Gauss requires that his method should reduce to the well-known answer: the arithmetic mean of the measured values.[note 3] Starting from these principles, Gauss demonstrates that the only law that rationalizes the choice of arithmetic mean as an estimator of the location parameter is the normal law of errors:[69]

$$\varphi\Delta = \frac{h}{\sqrt{\pi}}\, e^{-hh\Delta\Delta},$$

where h is "the measure of the precision of the observations".

Although Gauss was the first to suggest the normal distribution law, Laplace made significant contributions.[note 4] It was Laplace who first posed the problem of aggregating several observations in 1774,[71] although his own solution led to the Laplacian distribution. It was Laplace who first calculated the value of the integral $\int_{-\infty}^{\infty} e^{-t^2}\,dt = \sqrt{\pi}$ in 1782, providing the normalization constant for the normal distribution.[72] Finally, it was Laplace who in 1810 proved and presented to the Academy the fundamental central limit theorem, which emphasized the theoretical importance of the normal distribution.[73]

It is of interest to note that in 1809 an Irish-American mathematician Robert Adrain published two insightful but flawed derivations of the normal probability law, simultaneously and independently from Gauss.[74] His works remained largely unnoticed by the scientific community, until in 1871 they were exhumed by Abbe.[75]

In the middle of the 19th century Maxwell demonstrated that the normal distribution is not just a convenient mathematical tool, but may also occur in natural phenomena:[76] "The number of particles whose velocity, resolved in a certain direction, lies between x and x + dx is $N\,\frac{1}{\alpha\sqrt{\pi}}\,e^{-x^2/\alpha^2}\,dx$."

Naming

Since its introduction, the normal distribution has been known by many different names: the law of error, the law of facility of errors, Laplace's second law, Gaussian law, etc. Gauss himself apparently coined the term with reference to the "normal equations" involved in its applications, with normal having its technical meaning of orthogonal rather than "usual".[77] However, by the end of the 19th century some authors[note 5] had started using the name normal distribution, where the word "normal" was used as an adjective – the term now being seen as a reflection of the fact that this distribution was seen as typical, common – and thus "normal". Peirce (one of those authors) once defined "normal" thus: "...the 'normal' is not the average (or any other kind of mean) of what actually occurs, but of what would, in the long run, occur under certain circumstances."[78] Around the turn of the 20th century Pearson popularized the term normal as a designation for this distribution.[79]

Many years ago I called the Laplace–Gaussian curve the normal curve, which name, while it avoids an international question of priority, has the disadvantage of leading people to believe that all other distributions of frequency are in one sense or another 'abnormal'.

Also, it was Pearson who first wrote the distribution in terms of the standard deviation σ as in modern notation. Soon after this, in 1915, Fisher added the location parameter to the formula for normal distribution, expressing it in the way it is written nowadays:

$$df = \frac{1}{\sqrt{2\sigma^2\pi}}\, e^{-(x - m)^2/(2\sigma^2)}\, dx$$

The term "standard normal", which denotes the normal distribution with zero mean and unit variance came into general use around the 1950s, appearing in the popular textbooks by P. G. Hoel (1947) "Introduction to mathematical statistics" and A. M. Mood (1950) "Introduction to the theory of statistics".[80]

See also

- Bates distribution – similar to the Irwin–Hall distribution, but rescaled back into the 0 to 1 range

- Behrens–Fisher problem – the long-standing problem of testing whether two normal samples with different variances have the same means

- Bhattacharyya distance – method used to separate mixtures of normal distributions

- Erdős–Kac theorem – on the occurrence of the normal distribution in number theory

- Full width at half maximum

- Gaussian blur – convolution, which uses the normal distribution as a kernel

- Modified half-normal distribution

- Normally distributed and uncorrelated does not imply independent

- Ratio normal distribution

- Reciprocal normal distribution

- Standard normal table

- Stein's lemma

- Sub-Gaussian distribution

- Sum of normally distributed random variables

- Tweedie distribution – the normal distribution is a member of the family of Tweedie exponential dispersion models.

- Wrapped normal distribution – the normal distribution applied to a circular domain

- Z-test – using the normal distribution

Notes

- ^ For example, this algorithm is given in the article on the bc programming language.

- ^ De Moivre first published his findings in 1733, in a pamphlet "Approximatio ad Summam Terminorum Binomii (a + b)ⁿ in Seriem Expansi" that was designated for private circulation only. But it was not until the year 1738 that he made his results publicly available. The original pamphlet was reprinted several times, see for example Walker (1985).

- ^ "It has been customary certainly to regard as an axiom the hypothesis that if any quantity has been determined by several direct observations, made under the same circumstances and with equal care, the arithmetical mean of the observed values affords the most probable value, if not rigorously, yet very nearly at least, so that it is always most safe to adhere to it." — Gauss (1809, section 177)

- ^ "My custom of terming the curve the Gauss–Laplacian or normal curve saves us from proportioning the merit of discovery between the two great astronomer mathematicians." quote from Pearson (1905, p. 189)

- ^ Besides those specifically referenced here, such use is encountered in the works of Peirce, Galton (Galton (1889, chapter V)) and Lexis (Lexis (1878), Rohrbasser & Véron (2003)) c. 1875.[citation needed]

References

Citations

- ^ Weisstein, Eric W. "Normal Distribution". mathworld.wolfram.com. Retrieved August 15, 2020.

- ^ "Normal Distribution". Gale Encyclopedia of Psychology.

- ^ Casella & Berger (2001, p. 102)

- ^ Lyon, A. (2014). "Why are Normal Distributions Normal?". The British Journal for the Philosophy of Science.

- ^ a b "Normal Distribution". www.mathsisfun.com. Retrieved August 15, 2020.

- ^ Stigler (1982)

- ^ Halperin, Hartley & Hoel (1965, item 7)

- ^ McPherson (1990, p. 110)

- ^ Bernardo & Smith (2000, p. 121)

- ^ Scott, Clayton; Nowak, Robert (August 7, 2003). "The Q-function". Connexions.

- ^ Barak, Ohad (April 6, 2006). "Q Function and Error Function" (PDF). Tel Aviv University. Archived from the original (PDF) on March 25, 2009.

- ^ Weisstein, Eric W. "Normal Distribution Function". MathWorld.

- ^ Abramowitz, Milton; Stegun, Irene Ann, eds. (1983) [June 1964]. "Chapter 26, eqn 26.2.12". Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Applied Mathematics Series. Vol. 55 (Ninth reprint with additional corrections of tenth original printing with corrections (December 1972); first ed.). Washington D.C.; New York: United States Department of Commerce, National Bureau of Standards; Dover Publications. p. 932. ISBN 978-0-486-61272-0. LCCN 64-60036. MR 0167642. LCCN 65-12253.

- ^ "Wolfram Alpha: Computational Knowledge Engine". Wolframalpha.com. Retrieved March 3, 2017.

- ^ "Wolfram Alpha: Computational Knowledge Engine". Wolframalpha.com.

- ^ "Wolfram Alpha: Computational Knowledge Engine". Wolframalpha.com. Retrieved March 3, 2017.

- ^ Reference needed

- ^ Cover, Thomas M.; Thomas, Joy A. (2006). Elements of Information Theory. John Wiley and Sons. p. 254. ISBN 9780471748816.

- ^ Park, Sung Y.; Bera, Anil K. (2009). "Maximum Entropy Autoregressive Conditional Heteroskedasticity Model" (PDF). Journal of Econometrics. 150 (2): 219–230. CiteSeerX 10.1.1.511.9750. doi:10.1016/j.jeconom.2008.12.014. Archived from the original (PDF) on March 7, 2016. Retrieved June 2, 2011.

- ^ Geary, R. C. (1936). "The distribution of the 'Student's' ratio for non-normal samples". Supplement to the Journal of the Royal Statistical Society. 3 (2): 178–184.

- ^ Lukacs, Eugene (March 1942). "A Characterization of the Normal Distribution". Annals of Mathematical Statistics. 13 (1): 91–93. doi:10.1214/AOMS/1177731647. ISSN 0003-4851. JSTOR 2236166. MR 0006626. Zbl 0060.28509. Wikidata Q55897617.

- ^ a b c Patel & Read (1996, [2.1.4])

- ^ Fan (1991, p. 1258)

- ^ Patel & Read (1996, [2.1.8])

- ^ Papoulis, Athanasios. Probability, Random Variables and Stochastic Processes (4th ed.). p. 148.

- ^ Bryc (1995, p. 23)

- ^ Bryc (1995, p. 24)

- ^ Cover & Thomas (2006, p. 254)

- ^ Williams, David (2001). Weighing the odds : a course in probability and statistics (Reprinted. ed.). Cambridge [u.a.]: Cambridge Univ. Press. pp. 197–199. ISBN 978-0-521-00618-7.

- ^ Bernardo, José M.; Smith, Adrian F. M. (2000). Bayesian Theory (Reprint ed.). Chichester [u.a.]: Wiley. pp. 209, 366. ISBN 978-0-471-49464-5.

- ^ O'Hagan, A. (1994). Kendall's Advanced Theory of Statistics, Vol. 2B: Bayesian Inference. Edward Arnold. ISBN 0-340-52922-9. (Section 5.40)

- ^ a b Bryc (1995, p. 35)

- ^ UIUC, Lecture 21. The Multivariate Normal Distribution, 21.6:"Individually Gaussian Versus Jointly Gaussian".

- ^ Melnick, Edward L.; Tenenbein, Aaron (November 1982). "Misspecifications of the Normal Distribution". The American Statistician. 36 (4): 372–373.

- ^ "Kullback Leibler (KL) Distance of Two Normal (Gaussian) Probability Distributions". Allisons.org. December 5, 2007. Retrieved March 3, 2017.

- ^ Jordan, Michael I. (February 8, 2010). "Stat260: Bayesian Modeling and Inference: The Conjugate Prior for the Normal Distribution" (PDF).

- ^ Amari & Nagaoka (2000)

- ^ "Normal Approximation to Poisson Distribution". Stat.ucla.edu. Retrieved March 3, 2017.

- ^ a b Das, Abhranil (2020). "A method to integrate and classify normal distributions". arXiv:2012.14331 [stat.ML].

- ^ Bryc (1995, p. 27)

- ^ Weisstein, Eric W. "Normal Product Distribution". MathWorld. wolfram.com.

- ^ Lukacs, Eugene (1942). "A Characterization of the Normal Distribution". The Annals of Mathematical Statistics. 13 (1): 91–3. doi:10.1214/aoms/1177731647. ISSN 0003-4851. JSTOR 2236166.

- ^ Basu, D.; Laha, R. G. (1954). "On Some Characterizations of the Normal Distribution". Sankhyā. 13 (4): 359–62. ISSN 0036-4452. JSTOR 25048183.

- ^ Lehmann, E. L. (1997). Testing Statistical Hypotheses (2nd ed.). Springer. p. 199. ISBN 978-0-387-94919-2.

- ^ Patel & Read (1996, [2.3.6])

- ^ Galambos & Simonelli (2004, Theorem 3.5)

- ^ a b Lukacs & King (1954)

- ^ Quine, M.P. (1993). "On three characterisations of the normal distribution". Probability and Mathematical Statistics. 14 (2): 257–263.

- ^ John, S (1982). "The three parameter two-piece normal family of distributions and its fitting". Communications in Statistics - Theory and Methods. 11 (8): 879–885. doi:10.1080/03610928208828279.

- ^ a b Krishnamoorthy (2006, p. 127)

- ^ Krishnamoorthy (2006, p. 130)

- ^ Krishnamoorthy (2006, p. 133)

- ^ Huxley (1932)

- ^ Jaynes, Edwin T. (2003). Probability Theory: The Logic of Science. Cambridge University Press. pp. 592–593. ISBN 9780521592710.

- ^ Oosterbaan, Roland J. (1994). "Chapter 6: Frequency and Regression Analysis of Hydrologic Data" (PDF). In Ritzema, Henk P. (ed.). Drainage Principles and Applications, Publication 16 (second revised ed.). Wageningen, The Netherlands: International Institute for Land Reclamation and Improvement (ILRI). pp. 175–224. ISBN 978-90-70754-33-4.

- ^ Ioannidis, John P. A. (2005). "Why Most Published Research Findings Are False".

- ^ Wichura, Michael J. (1988). "Algorithm AS241: The Percentage Points of the Normal Distribution". Applied Statistics. 37 (3): 477–84. doi:10.2307/2347330. JSTOR 2347330.

- ^ Johnson, Kotz & Balakrishnan (1995, Equation (26.48))

- ^ Kinderman & Monahan (1977)

- ^ Leva (1992)

- ^ Marsaglia & Tsang (2000)

- ^ Karney (2016)

- ^ Monahan (1985, section 2)

- ^ Wallace (1996)

- ^ Johnson, Kotz & Balakrishnan (1994, p. 85)

- ^ Le Cam & Lo Yang (2000, p. 74)

- ^ De Moivre, Abraham (1733), Corollary I – see Walker (1985, p. 77)

- ^ Stigler (1986, p. 76)

- ^ Gauss (1809, section 177)

- ^ Gauss (1809, section 179)

- ^ Laplace (1774, Problem III)

- ^ Pearson (1905, p. 189)

- ^ Stigler (1986, p. 144)

- ^ Stigler (1978, p. 243)

- ^ Stigler (1978, p. 244)

- ^ Maxwell (1860, p. 23)

- ^ Jaynes, Edwin T. Probability Theory: The Logic of Science, Ch. 7.

- ^ Peirce, Charles S. (c. 1909 MS), Collected Papers v. 6, paragraph 327.

- ^ Kruskal & Stigler (1997).

- ^ "Earliest uses... (entry STANDARD NORMAL CURVE)".

Sources

- Aldrich, John; Miller, Jeff. "Earliest Uses of Symbols in Probability and Statistics".

- Aldrich, John; Miller, Jeff. "Earliest Known Uses of Some of the Words of Mathematics". In particular, the entries for "bell-shaped and bell curve", "normal (distribution)", "Gaussian", and "Error, law of error, theory of errors, etc.".

- Amari, Shun-ichi; Nagaoka, Hiroshi (2000). Methods of Information Geometry. Oxford University Press. ISBN 978-0-8218-0531-2.

- Bernardo, José M.; Smith, Adrian F. M. (2000). Bayesian Theory. Wiley. ISBN 978-0-471-49464-5.

- Bryc, Wlodzimierz (1995). The Normal Distribution: Characterizations with Applications. Springer-Verlag. ISBN 978-0-387-97990-8.

- Casella, George; Berger, Roger L. (2001). Statistical Inference (2nd ed.). Duxbury. ISBN 978-0-534-24312-8.

- Cody, William J. (1969). "Rational Chebyshev Approximations for the Error Function". Mathematics of Computation. 23 (107): 631–638. doi:10.1090/S0025-5718-1969-0247736-4.

- Cover, Thomas M.; Thomas, Joy A. (2006). Elements of Information Theory. John Wiley and Sons.

- de Moivre, Abraham (1738). The Doctrine of Chances. ISBN 978-0-8218-2103-9.

- Fan, Jianqing (1991). "On the optimal rates of convergence for nonparametric deconvolution problems". The Annals of Statistics. 19 (3): 1257–1272. doi:10.1214/aos/1176348248. JSTOR 2241949.

- Galton, Francis (1889). Natural Inheritance. London, UK: Richard Clay and Sons.

- Galambos, Janos; Simonelli, Italo (2004). Products of Random Variables: Applications to Problems of Physics and to Arithmetical Functions. Marcel Dekker, Inc. ISBN 978-0-8247-5402-0.

- Gauss, Carolo Friderico (1809). Theoria motvs corporvm coelestivm in sectionibvs conicis Solem ambientivm [Theory of the Motion of the Heavenly Bodies Moving about the Sun in Conic Sections] (in Latin). Hambvrgi, Svmtibvs F. Perthes et I. H. Besser. English translation.

- Gould, Stephen Jay (1981). The Mismeasure of Man (first ed.). W. W. Norton. ISBN 978-0-393-01489-1.

- Halperin, Max; Hartley, Herman O.; Hoel, Paul G. (1965). "Recommended Standards for Statistical Symbols and Notation. COPSS Committee on Symbols and Notation". The American Statistician. 19 (3): 12–14. doi:10.2307/2681417. JSTOR 2681417.

- Hart, John F.; et al. (1968). Computer Approximations. New York, NY: John Wiley & Sons, Inc. ISBN 978-0-88275-642-4.

- "Normal Distribution", Encyclopedia of Mathematics, EMS Press, 2001 [1994]

- Herrnstein, Richard J.; Murray, Charles (1994). The Bell Curve: Intelligence and Class Structure in American Life. Free Press. ISBN 978-0-02-914673-6.

- Huxley, Julian S. (1932). Problems of Relative Growth. London. ISBN 978-0-486-61114-3. OCLC 476909537.

- Johnson, Norman L.; Kotz, Samuel; Balakrishnan, Narayanaswamy (1994). Continuous Univariate Distributions, Volume 1. Wiley. ISBN 978-0-471-58495-7.

- Johnson, Norman L.; Kotz, Samuel; Balakrishnan, Narayanaswamy (1995). Continuous Univariate Distributions, Volume 2. Wiley. ISBN 978-0-471-58494-0.

- Karney, C. F. F. (2016). "Sampling exactly from the normal distribution". ACM Transactions on Mathematical Software. 42 (1): 3:1–14. arXiv:1303.6257. doi:10.1145/2710016. S2CID 14252035.

- Kinderman, Albert J.; Monahan, John F. (1977). "Computer Generation of Random Variables Using the Ratio of Uniform Deviates". ACM Transactions on Mathematical Software. 3 (3): 257–260. doi:10.1145/355744.355750. S2CID 12884505.

- Krishnamoorthy, Kalimuthu (2006). Handbook of Statistical Distributions with Applications. Chapman & Hall/CRC. ISBN 978-1-58488-635-8.

- Kruskal, William H.; Stigler, Stephen M. (1997). Spencer, Bruce D. (ed.). Normative Terminology: 'Normal' in Statistics and Elsewhere. Statistics and Public Policy. Oxford University Press. ISBN 978-0-19-852341-3.

- Laplace, Pierre-Simon de (1774). "Mémoire sur la probabilité des causes par les événements". Mémoires de l'Académie Royale des Sciences de Paris (Savants étrangers), Tome 6: 621–656. Translated by Stephen M. Stigler in Statistical Science 1 (3), 1986: JSTOR 2245476.

- Laplace, Pierre-Simon (1812). Théorie analytique des probabilités [Analytical theory of probabilities]. Paris, Ve. Courcier.

- Le Cam, Lucien; Lo Yang, Grace (2000). Asymptotics in Statistics: Some Basic Concepts (second ed.). Springer. ISBN 978-0-387-95036-5.

- Leva, Joseph L. (1992). "A fast normal random number generator". ACM Transactions on Mathematical Software. 18 (4): 449–453. CiteSeerX 10.1.1.544.5806. doi:10.1145/138351.138364. S2CID 15802663. Archived from the original on July 16, 2010.

- Lexis, Wilhelm (1878). "Sur la durée normale de la vie humaine et sur la théorie de la stabilité des rapports statistiques". Annales de Démographie Internationale. Paris. II: 447–462.

- Lukacs, Eugene; King, Edgar P. (1954). "A Property of Normal Distribution". The Annals of Mathematical Statistics. 25 (2): 389–394. doi:10.1214/aoms/1177728796. JSTOR 2236741.

- McPherson, Glen (1990). Statistics in Scientific Investigation: Its Basis, Application and Interpretation. Springer-Verlag. ISBN 978-0-387-97137-7.

- Marsaglia, George; Tsang, Wai Wan (2000). "The Ziggurat Method for Generating Random Variables". Journal of Statistical Software. 5 (8). doi:10.18637/jss.v005.i08.

- Marsaglia, George (2004). "Evaluating the Normal Distribution". Journal of Statistical Software. 11 (4). doi:10.18637/jss.v011.i04.

- Maxwell, James Clerk (1860). "V. Illustrations of the dynamical theory of gases. — Part I: On the motions and collisions of perfectly elastic spheres". Philosophical Magazine. Series 4. 19 (124): 19–32. doi:10.1080/14786446008642818.

- Monahan, J. F. (1985). "Accuracy in random number generation". Mathematics of Computation. 45 (172): 559–568. doi:10.1090/S0025-5718-1985-0804945-X.

- Patel, Jagdish K.; Read, Campbell B. (1996). Handbook of the Normal Distribution (2nd ed.). CRC Press. ISBN 978-0-8247-9342-5.

- Pearson, Karl (1901). "On Lines and Planes of Closest Fit to Systems of Points in Space". Philosophical Magazine. 6. 2 (11): 559–572. doi:10.1080/14786440109462720.

- Pearson, Karl (1905). "'Das Fehlergesetz und seine Verallgemeinerungen durch Fechner und Pearson'. A rejoinder". Biometrika. 4 (1): 169–212. doi:10.2307/2331536. JSTOR 2331536.

- Pearson, Karl (1920). "Notes on the History of Correlation". Biometrika. 13 (1): 25–45. doi:10.1093/biomet/13.1.25. JSTOR 2331722.

- Rohrbasser, Jean-Marc; Véron, Jacques (2003). "Wilhelm Lexis: The Normal Length of Life as an Expression of the "Nature of Things"". Population. 58 (3): 303–322. doi:10.3917/pope.303.0303.

- Shore, H (1982). "Simple Approximations for the Inverse Cumulative Function, the Density Function and the Loss Integral of the Normal Distribution". Journal of the Royal Statistical Society. Series C (Applied Statistics). 31 (2): 108–114. doi:10.2307/2347972. JSTOR 2347972.

- Shore, H (2005). "Accurate RMM-Based Approximations for the CDF of the Normal Distribution". Communications in Statistics – Theory and Methods. 34 (3): 507–513. doi:10.1081/sta-200052102. S2CID 122148043.

- Shore, H (2011). "Response Modeling Methodology". WIREs Comput Stat. 3 (4): 357–372. doi:10.1002/wics.151. S2CID 62021374.

- Shore, H (2012). "Estimating Response Modeling Methodology Models". WIREs Comput Stat. 4 (3): 323–333. doi:10.1002/wics.1199. S2CID 122366147.

- Stigler, Stephen M. (1978). "Mathematical Statistics in the Early States". The Annals of Statistics. 6 (2): 239–265. doi:10.1214/aos/1176344123. JSTOR 2958876.

- Stigler, Stephen M. (1982). "A Modest Proposal: A New Standard for the Normal". The American Statistician. 36 (2): 137–138. doi:10.2307/2684031. JSTOR 2684031.

- Stigler, Stephen M. (1986). The History of Statistics: The Measurement of Uncertainty before 1900. Harvard University Press. ISBN 978-0-674-40340-6.

- Stigler, Stephen M. (1999). Statistics on the Table. Harvard University Press. ISBN 978-0-674-83601-3.

- Walker, Helen M. (1985). "De Moivre on the Law of Normal Probability". In Smith, David Eugene (ed.). A Source Book in Mathematics. Dover. ISBN 978-0-486-64690-9.

- Wallace, C. S. (1996). "Fast pseudo-random generators for normal and exponential variates". ACM Transactions on Mathematical Software. 22 (1): 119–127. doi:10.1145/225545.225554. S2CID 18514848.

- Weisstein, Eric W. "Normal Distribution". MathWorld.

- West, Graeme (2009). "Better Approximations to Cumulative Normal Functions". Wilmott Magazine: 70–76.

- Zelen, Marvin; Severo, Norman C. (1964). "Probability Functions" (chapter 26). In Abramowitz, M.; Stegun, I. A. (eds.). Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. National Bureau of Standards; New York, NY: Dover. ISBN 978-0-486-61272-0.

Cumulative distribution function of the normal distribution with mean μ and standard deviation σ:

$$\frac{1}{2}\left[1+\operatorname{erf}\left(\frac{x-\mu}{\sigma\sqrt{2}}\right)\right]$$

Standard normal CDF in terms of the error function erf:

$$\Phi(x)=\frac{1}{2}\left[1+\operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right]$$

CDF of a normal distribution with mean μ and standard deviation σ, obtained by standardizing:

$$F(x)=\Phi\left(\frac{x-\mu}{\sigma}\right)=\frac{1}{2}\left[1+\operatorname{erf}\left(\frac{x-\mu}{\sigma\sqrt{2}}\right)\right]$$
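
The erf form above translates directly into code. The following is a minimal Python sketch that assumes Python 3.8 or later. The helper name `normal_cdf` is hypothetical, and `statistics.NormalDist` from the standard library appears only as a cross-check:

```python
import math
from statistics import NormalDist

def normal_cdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """F(x) = 1/2 * [1 + erf((x - mu) / (sigma * sqrt(2)))]."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

# Compare with the standard library's NormalDist at a few (mu, sigma, x) points.
for mu, sigma, x in [(0.0, 1.0, 1.0), (10.0, 2.0, 7.5), (-3.0, 0.5, -3.0)]:
    print(mu, sigma, x, normal_cdf(x, mu, sigma), NormalDist(mu, sigma).cdf(x))
```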

Series expansion of Φ, where (2n+1)!! denotes the double factorial:

$$\Phi(x)=\frac{1}{2}+\frac{1}{\sqrt{2\pi}}\,e^{-x^{2}/2}\left[x+\frac{x^{3}}{3}+\frac{x^{5}}{3\cdot 5}+\cdots+\frac{x^{2n+1}}{(2n+1)!!}+\cdots\right]$$
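
The series can be summed term by term, because each term equals the previous one multiplied by x²/(2n + 1). Below is a Python sketch built on that recurrence, with results compared against the erf form. The names `phi_series` and `phi_erf` and the stopping tolerance are illustrative assumptions. The series converges for every x. For large |x|, however, it needs more terms, and for negative x it suffers from cancellation, so treat this as an illustration rather than a production routine:

```python
import math

def phi_series(x: float, rel_tol: float = 1e-16, max_terms: int = 1000) -> float:
    """Phi(x) = 1/2 + pdf(x) * sum_{n>=0} x^(2n+1) / (2n+1)!!, pdf = standard normal density."""
    term = x    # n = 0 term: x / 1!!
    total = x
    for n in range(1, max_terms):
        term *= x * x / (2 * n + 1)   # term_n = term_{n-1} * x^2 / (2n + 1)
        total += term
        if abs(term) <= rel_tol * abs(total):
            break
    density = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return 0.5 + density * total

def phi_erf(x: float) -> float:
    """Reference value from the erf form of the standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

for x in (-2.0, -0.5, 0.0, 1.0, 3.0):
    print(f"{x:5.1f}  series={phi_series(x):.15f}  erf={phi_erf(x):.15f}")
```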

Central moments of X with mean μ and standard deviation σ, where n!! is the double factorial:

$$\operatorname{E}\left[(X-\mu)^{p}\right]=\begin{cases}0 & \text{if } p \text{ is odd,}\\ \sigma^{p}(p-1)!! & \text{if } p \text{ is even.}\end{cases}$$

Central absolute moments:

$$\begin{aligned}\operatorname{E}\left[|X-\mu|^{p}\right]&=\sigma^{p}(p-1)!!\cdot\begin{cases}\sqrt{\frac{2}{\pi}} & \text{if } p \text{ is odd}\\ 1 & \text{if } p \text{ is even}\end{cases}\\ &=\sigma^{p}\cdot\frac{2^{p/2}\,\Gamma\left(\frac{p+1}{2}\right)}{\sqrt{\pi}}.\end{aligned}$$
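
The moment formulas above can be checked numerically. This Python sketch evaluates the double-factorial and Gamma-function forms of the absolute moments. It also compares the central moments with a midpoint-rule integral of y^p times the density over ±12σ. All function names are hypothetical, and the integration range and step count are arbitrary choices:

```python
import math

def double_factorial(n: int) -> int:
    """n!! = n * (n - 2) * (n - 4) * ...; by convention 0!! = (-1)!! = 1."""
    result = 1
    while n > 1:
        result *= n
        n -= 2
    return result

def central_moment(p: int, sigma: float) -> float:
    """E[(X - mu)^p] for X ~ N(mu, sigma^2): zero for odd p."""
    return 0.0 if p % 2 else sigma ** p * double_factorial(p - 1)

def abs_central_moment(p: int, sigma: float) -> float:
    """E[|X - mu|^p], double-factorial form."""
    factor = math.sqrt(2.0 / math.pi) if p % 2 else 1.0
    return sigma ** p * double_factorial(p - 1) * factor

def abs_central_moment_gamma(p: float, sigma: float) -> float:
    """E[|X - mu|^p], Gamma-function form (also meaningful for non-integer p > -1)."""
    return sigma ** p * 2 ** (p / 2) * math.gamma((p + 1) / 2) / math.sqrt(math.pi)

def central_moment_numeric(p: int, sigma: float, n: int = 20_000, width: float = 12.0) -> float:
    """Midpoint-rule integral of y^p * pdf(y) over [-width*sigma, width*sigma]."""
    h = 2.0 * width * sigma / n
    total = 0.0
    for i in range(n):
        y = -width * sigma + (i + 0.5) * h
        total += y ** p * math.exp(-0.5 * (y / sigma) ** 2)
    return total * h / (sigma * math.sqrt(2.0 * math.pi))

sigma = 1.5
for p in range(1, 7):
    print(p, central_moment(p, sigma), central_moment_numeric(p, sigma),
          abs_central_moment(p, sigma), abs_central_moment_gamma(p, sigma))
```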

Raw moments and raw absolute moments. Here U is Tricomi's confluent hypergeometric function and ₁F₁ is Kummer's confluent hypergeometric function:

$$\begin{aligned}\operatorname{E}\left[X^{p}\right]&=\sigma^{p}\cdot(-i\sqrt{2})^{p}\,U\left(-\frac{p}{2},\frac{1}{2},-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^{2}\right),\\ \operatorname{E}\left[|X|^{p}\right]&=\sigma^{p}\cdot 2^{p/2}\,\frac{\Gamma\left(\frac{1+p}{2}\right)}{\sqrt{\pi}}\,{}_{1}F_{1}\left(-\frac{p}{2},\frac{1}{2},-\frac{1}{2}\left(\frac{\mu}{\sigma}\right)^{2}\right).\end{aligned}$$

Mean of X conditioned on lying in the interval [a, b], that is, the mean of the truncated normal distribution. Here f and F are the density and CDF of X:

$$\operatorname{E}\left[X\mid a<X<b\right]=\mu-\sigma^{2}\frac{f(b)-f(a)}{F(b)-F(a)}$$
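
The next Python sketch computes this conditional mean using `statistics.NormalDist` (Python 3.8+) for f and F. The names `truncated_mean` and `truncated_mean_numeric` are hypothetical. The second function serves only as a midpoint-rule check of the closed form:

```python
from statistics import NormalDist

def truncated_mean(mu: float, sigma: float, a: float, b: float) -> float:
    """E[X | a < X < b] = mu - sigma^2 * (f(b) - f(a)) / (F(b) - F(a))."""
    if not a < b:
        raise ValueError("need a < b")
    dist = NormalDist(mu, sigma)
    mass = dist.cdf(b) - dist.cdf(a)   # P(a < X < b)
    return mu - sigma ** 2 * (dist.pdf(b) - dist.pdf(a)) / mass

def truncated_mean_numeric(mu, sigma, a, b, n=20_000):
    """Midpoint-rule estimate of (integral of x f(x) over [a, b]) / (F(b) - F(a))."""
    dist = NormalDist(mu, sigma)
    h = (b - a) / n
    xs = (a + (i + 0.5) * h for i in range(n))
    num = h * sum(x * dist.pdf(x) for x in xs)
    return num / (dist.cdf(b) - dist.cdf(a))

# Half-normal case: for a = 0 and large b the mean approaches sqrt(2/pi), about 0.7979.
print(truncated_mean(0.0, 1.0, 0.0, 10.0))
print(truncated_mean(5.0, 2.0, 4.0, 9.0), truncated_mean_numeric(5.0, 2.0, 4.0, 9.0))
```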

Moment-generating function:

$$M(t)=\operatorname{E}\left[e^{tX}\right]=\hat{f}(it)=e^{\mu t}e^{\frac{1}{2}\sigma^{2}t^{2}}$$
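
The closed form of M(t) can be tested against a direct numerical evaluation. The Python sketch below computes E[e^{tX}] with the midpoint rule and compares the result with the formula. The parameter values, integration range, step count and function names are illustrative assumptions:

```python
import math
from statistics import NormalDist

def mgf_closed_form(t: float, mu: float, sigma: float) -> float:
    """M(t) = exp(mu * t + sigma^2 * t^2 / 2)."""
    return math.exp(mu * t + 0.5 * sigma ** 2 * t ** 2)

def mgf_numeric(t, mu, sigma, n=40_000, width=20.0):
    """Midpoint-rule estimate of E[e^{tX}] = integral of e^{tx} f(x) over mu +/- width*sigma."""
    dist = NormalDist(mu, sigma)
    a = mu - width * sigma
    h = 2.0 * width * sigma / n
    xs = (a + (i + 0.5) * h for i in range(n))
    return h * sum(math.exp(t * x) * dist.pdf(x) for x in xs)

for t in (-1.0, 0.0, 0.7, 2.0):
    print(t, mgf_closed_form(t, 0.5, 0.8), mgf_numeric(t, 0.5, 0.8))
```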

Stein class for the normal distribution:

$$f:\mathbb{R}\to\mathbb{R}\ \text{such that}\ \mathbb{E}\left[|f'(X)|\right]<\infty$$

Take independent observations X₁, …, Xₙ from a normal distribution with mean μ, and let X̄ be their sample mean and S their sample standard deviation. The t-statistic then follows Student's t-distribution with n − 1 degrees of freedom:

$$t=\frac{\overline{X}-\mu}{S/\sqrt{n}}=\frac{\frac{1}{n}(X_{1}+\cdots+X_{n})-\mu}{\sqrt{\frac{1}{n(n-1)}\left[(X_{1}-\overline{X})^{2}+\cdots+(X_{n}-\overline{X})^{2}\right]}}\sim t_{n-1}$$
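
Here is a minimal Python sketch of the t-statistic. The data values and the name `t_statistic` are hypothetical. The code uses `statistics.stdev` because it applies the n − 1 divisor that appears in the formula:

```python
import math
import statistics

def t_statistic(sample, mu0):
    """t = (xbar - mu0) / (S / sqrt(n)), with S the sample standard deviation (n - 1 divisor)."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    xbar = statistics.fmean(sample)
    s = statistics.stdev(sample)   # sqrt(sum((x - xbar)^2) / (n - 1))
    return (xbar - mu0) / (s / math.sqrt(n))

data = [5.1, 4.9, 5.3, 5.0, 4.8]   # hypothetical measurements
# Under normality, compare this value with a t distribution with n - 1 = 4 degrees of freedom.
print(t_statistic(data, 5.0))
```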

$$\operatorname{T}(X)=\sqrt{\frac{2}{\pi}}(\sigma_{2}-\sigma_{1})\left[\left(\frac{4}{\pi}-1\right)(\sigma_{2}-\sigma_{1})^{2}+\sigma_{1}\sigma_{2}\right]$$

Confidence interval at level 1 − α for μ. Here μ̂ is the sample mean, s is the sample standard deviation, and $t_{n-1,1-\alpha/2}$ is the (1 − α/2)-quantile of Student's t-distribution with n − 1 degrees of freedom:

$$\mu\in\left[\hat{\mu}-t_{n-1,1-\alpha/2}\frac{1}{\sqrt{n}}s,\ \hat{\mu}+t_{n-1,1-\alpha/2}\frac{1}{\sqrt{n}}s\right]$$

Confidence interval at level 1 − α for σ², where $\chi^{2}_{n-1,p}$ is the p-quantile of the chi-squared distribution with n − 1 degrees of freedom:

$$\sigma^{2}\in\left[\frac{(n-1)s^{2}}{\chi^{2}_{n-1,1-\alpha/2}},\ \frac{(n-1)s^{2}}{\chi^{2}_{n-1,\alpha/2}}\right]$$

Approximate (large-sample) confidence interval for μ, where $z_{\alpha/2}$ is the α/2-quantile of the standard normal distribution:

$$\mu\in\left[\hat{\mu}-|z_{\alpha/2}|\frac{1}{\sqrt{n}}s,\ \hat{\mu}+|z_{\alpha/2}|\frac{1}{\sqrt{n}}s\right]$$

Approximate (large-sample) confidence interval for σ²:

$$\sigma^{2}\in\left[s^{2}-|z_{\alpha/2}|\frac{\sqrt{2}}{\sqrt{n}}s^{2},\ s^{2}+|z_{\alpha/2}|\frac{\sqrt{2}}{\sqrt{n}}s^{2}\right]$$
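
Both large-sample intervals above need only the standard normal quantile, which `statistics.NormalDist().inv_cdf` provides (Python 3.8+). The following is a sketch; `approx_intervals` is a hypothetical name, the data are made up, and s² is taken to be the sample variance with the n − 1 divisor:

```python
import math
import statistics
from statistics import NormalDist

def approx_intervals(sample, alpha=0.05):
    """Large-sample intervals for mu and sigma^2 at nominal level 1 - alpha."""
    n = len(sample)
    mu_hat = statistics.fmean(sample)
    s2 = statistics.variance(sample)          # sample variance, n - 1 divisor
    s = math.sqrt(s2)
    z = NormalDist().inv_cdf(1 - alpha / 2)   # equals |z_{alpha/2}| by symmetry
    half_mu = z * s / math.sqrt(n)
    half_var = z * math.sqrt(2.0 / n) * s2
    return (mu_hat - half_mu, mu_hat + half_mu), (s2 - half_var, s2 + half_var)

sample = [9.8, 10.4, 10.1, 9.7, 10.0, 10.3, 9.9, 10.2]   # hypothetical data
mean_ci, var_ci = approx_intervals(sample)
print("mu in", mean_ci)
print("sigma^2 in", var_ci)
```

For small n, the variance interval can extend below zero. This happens whenever |z_{α/2}|·√(2/n) > 1, that is, for n below about 7.7 when α = 0.05. This is one reason to prefer the chi-squared interval for small samples.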